1. Introduction

Intrapartum fetal heart rate monitoring:

Because it is likely to provide obstetricians with significant information related to the health status of the fetus during delivery, intrapartum fetal heart rate (FHR) monitoring is a routine procedure in hospitals. Notably, it is expected to permit detection of fetal acidosis, which may induce severe consequences for both the baby and the mother and thus requires a timely and relevant decision for rapid intervention and operative delivery [1]. In daily clinical practice, FHR is mostly inspected visually by clinicians trained on guidelines formalized by the International Federation of Gynecology and Obstetrics (FIGO) [2,3]. However, it has been well documented that such visual inspection is prone to severe inter-individual variability and even shows substantial intra-individual variability [4]. This reflects both that FHR temporal dynamics are complex and hard to assess and that FIGO criteria lead to a demanding evaluation, as they mix several aspects of FHR dynamics (baseline drift, decelerations, accelerations, long- and short-term variabilities). Difficulties in performing an objective assessment of these criteria have led to a substantial number of unnecessary Caesarean sections [5]. This has triggered a large amount of research worldwide aiming both to compute the FIGO criteria in a reproducible and objective way [2] and to devise new signal processing-inspired features to characterize FHR temporal dynamics (cf. [6,7] for reviews).

Related works:

After the seminal contribution in the analysis of heart rate variability (HRV) in adults [8], spectrum estimation was among the first signal processing tools considered for computerized analysis of FHR, constructed either on models driven by characteristic time scales [9,10,11] or on the scale-free paradigm [12,13,14]. Further, aiming to explore temporal dynamics beyond mere temporal correlations, several variations of nonlinear analysis have been envisaged both for antepartum and intrapartum FHR [15], based, e.g., on multifractal analysis [13], scattering transforms [16], phase-driven synchronous pattern averages [17] or complexity and entropy measures [18,19,20]. Interested readers are referred to, e.g., [6,7] for overviews. There have also been several attempts to combine features different in nature through multivariate classification using supervised machine learning strategies (cf., e.g., [6,21,22,23,24]).

Measures from complexity theory or information theory remain, however, among the most used tools to construct HRV characterization. They are defined independently within either the (deterministic) dynamical systems framework or the (random) stochastic process framework. The former led to standard references, both for adult and for antepartum and intrapartum fetal heart rate analysis: approximate entropy (ApEn) [18,25] and sample entropy (SampEn) [26], which can be regarded as practical approximations to Kolmogorov–Sinai or Eckmann–Ruelle complexity measures. The stochastic process framework leads to the definitions of Shannon and Rényi entropies and entropy rates. Both worlds are connected by several relations, cf., e.g., [27,28] for reviews. Implementations of ApEn and SampEn rely on the correlation integral-based algorithm (CI) [18,29], while that of Shannon entropy rates may instead benefit from the k-nearest neighbor (k-NN) algorithm [30], which brings robustness and improved performance to entropy estimation [31,32,33].

Labor stages:

Automated FHR analysis is complicated by the existence of two distinct stages during labor. The dilatation stage (Stage 1) consists of progressive cervical dilatation and regular contractions. The active pushing stage (Stage 2) is characterized by a fully-dilated cervix and expulsive contractions. The most common approaches in FHR analysis consist of not distinguishing stages and performing a global analysis [23,34] or of focusing on Stage 1 only, as it is better documented and usually shows data with better quality, cf., e.g., [24,35]. Whether or not temporal dynamics associated with each stage are different has not been intensively explored yet (see a contrario [36,37]). However, recently, some contributions have started to conduct systematic comparisons [38,39].

Goals, contributions and outline:

The present contribution remains in the category of works aiming to design new efficient features for FHR, here based on advanced information theoretic concepts. These new tools are applied to a high quality, large (1404 subjects) and documented FHR database collected across years in an academic hospital in France and described in Section 2. The database is split into two datasets associated each with one stage of labor, which enables us first to assess and compare acidosis detection performance achieved by the proposed features independently at each stage and second to address differences in FHR temporal dynamics between the two stages. Reexamining formally the definitions of entropy rates in information theory, Section 3.1 first establishes that they can be split into two components: Shannon entropy, which quantifies data static properties, and auto-mutual information (AMI), which characterizes temporal dynamics in data, combining both linear and nonlinear (or higher order) statistics. ApEn and SampEn, defined from complexity theory, are then explicitly related to entropy rates and, hence, to AMI (cf. Section 3.3). Estimation procedures for Shannon entropy, entropy rate and AMI, based on k-nearest neighbor (k-NN) algorithms [30], are compared to those of ApEn and SampEn, constructed on correlation integral algorithms [18,29,40]. Acidosis detection performances are reported in Section 4.1. Results are discussed in terms of quality versus analysis window size, k-NN or correlation integral-based procedures and differences between Stages 1 and 2. Further, a longitudinal study consisting of sliding analysis in overlapping windows across the delivery process permits showing that processes characterizing Stages 1 and 2 are different (Section 4.4).

2. Datasets: Intrapartum Fetal Heart Rate Times Series and Labor Stages

Data collection:

Intrapartum fetal heart rate data were collected at the academic Femme-Mère-Enfant hospital, in Lyon, France, during daily routine monitoring across the years 2000 to 2010. They were recorded using STAN S21 or S31 devices with internal scalp electrodes at 12-bit resolution, 500-Hz sampling rate (STAN, Neoventa Medical, Sweden). Clinical information was provided by the obstetrician in charge, reporting delivery conditions, as well as the health status of the baby, notably the umbilical artery pH after delivery and the decision for intervention due to suspected acidosis [41].

Datasets:

For the present study, subjects were included using criteria detailed in [24,41], leading to a total of 1404 tracings, lasting from 30 min to several hours. These criteria essentially aim to reject subjects with too low quality recording (e.g., too many missing data, too large gaps, too short recordings, etc.). As a result, for subjects in the database, the average fraction of data missing in the last 20 min before delivery is less than 5%. The first goal of the present work is to assess the relevance of new information theoretic measures; their robustness to poor quality data is postponed for future work.

The measurement of pH, performed by blood test immediately after delivery, is systematically documented and used as the ground-truth: When pH , the newborn is considered as having suffered from acidosis and is referred to as acidotic (A, pH ). Conversely, when pH , the newborn is considered as not having suffered from acidosis during delivery and is termed normal (N, pH ). In order to have a meaningful pH indication, we retain only subjects for which the time between end of recording and birth is lower than or equal to 10 min.

Following the discussion above on labor stages, subjects are split into two different datasets. Dataset I consists of subjects for which delivery took place after a short Stage 2 (less than 15 min) or during Stage 1 (Stage 2 was absent). It contains 913 normal and 26 acidotic subjects. Dataset II gathers FHR for delivery that took place after more than 15 min of Stage 2. It contains 450 normal and 15 acidotic subjects.

Beats-per-minute time series and preprocessing:

For each subject, the collection device provides us with a digitized list of RR-interarrivals in ms. In reference to common practice in FHR analysis and for ease of comparison amongst subjects, RR-interarrivals are converted into regularly-sampled beats-per-minute time series, by linear interpolation of the samples 36,000/. The sampling frequency has been set to Hz as FHR does not contain any relevant information above 3 Hz.

3. Methods

Outline:

We describe in this section the five features that we use to analyze heart rate signals. We propose to apply information theory, as defined by Shannon, to the analysis of cardiac signals. We do so by computing the Shannon entropy, the Shannon entropy rate and the auto-mutual information. The first section below is devoted to the definition of these quantities, which provides three features. The second section reports the definitions of two features rooted in complexity theory: approximate entropy (ApEn) and sample entropy (SampEn), which are classically used in cardiac signal analysis. Although we use them in practice only as benchmarks, we devote the last section to their relation with the new features we propose.

Information theory and complexity theory only differ in the nature of the objects under study. Information theory, on the one hand, aims to analyze random processes and defines functionals of probability densities. Complexity theory, on the other hand, aims to analyze signals produced by dynamical systems and assumes the existence of ergodic probability measures to describe the density of trajectories in phase space, so that they can be manipulated as probability densities. In this spirit, we consider throughout this paper the signals to analyze as random processes, although they indeed originate from a dynamical system.

Assumptions:

For the sake of simplicity in the description of the features and for practical use, we assume that signals are monovariate (unidimensional) and centered (zero mean); centering entails no loss of generality because the five features we use are independent of the first moment of the probability density function. We also assume that signals are stationary. Although this may seem at first a very strong assumption, it is very reasonable when examining time windows smaller than the natural time scale of the evolution between Stages 1 and 2, as we discuss in Section 4.4, and larger than events such as contractions. Finally, we also assume that the signals contain $N$ points, sampled at a constant frequency. All estimates depend on $N$ via finite size effects. In the following, we do not mention this dependence explicitly in the notations and only compare features computed over the same window size.

Time-embedding:

Because we are interested in the dynamics of the signal, we use the delay-embedding procedure introduced by Takens [42] in the context of dynamical systems. Its goal is to include time-correlations in the statistics. We construct, by sampling the initial process $X = \{x_t\}$ every $\tau$ points in time, an $m$-dimensional time-embedded process $X_t^{(m,\tau)}$ as:

$$X_t^{(m,\tau)} = \left(x_t,\; x_{t-\tau},\; \ldots,\; x_{t-(m-1)\tau}\right). \tag{1}$$

In practice, we have a finite number $N$ of points in the time series, so there are $N-(m-1)\tau$ well-defined embedded vectors.
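The embedding step can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `delay_embed` is hypothetical, and the convention that each vector stacks the current sample with its past samples is an assumption made here for concreteness.

```python
from typing import List, Sequence, Tuple


def delay_embed(x: Sequence[float], m: int, tau: int) -> List[Tuple[float, ...]]:
    """Return the embedded vectors (x[t], x[t - tau], ..., x[t - (m - 1) * tau]).

    Assumed convention: each vector holds the current sample and its past.
    """
    if m < 1 or tau < 1:
        raise ValueError("m and tau must be positive integers")
    start = (m - 1) * tau  # first index for which the whole past is available
    return [
        tuple(x[t - j * tau] for j in range(m))
        for t in range(start, len(x))
    ]


signal = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
vectors = delay_embed(signal, m=3, tau=2)
print(len(vectors))  # 3 vectors, i.e. len(signal) - (m - 1) * tau
print(vectors[0])    # (4.0, 2.0, 0.0)
```

With 7 samples, m = 3 and a delay of 2, only 3 complete vectors exist, which shows how quickly large embedding dimensions consume short analysis windows.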

3.1. Information Theory Features

We now briefly recall definitions from information theory introduced by Shannon [43]. This paradigm aims to describe processes in terms of information, and it can be applied to any experimental signal.

3.1.1. Shannon and Rényi Entropies

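Shannon entropy $H(X_t^{(m,\tau)})$ of an $m$-dimensional embedded process $X_t^{(m,\tau)}$ is a functional of its joint probability density function $p(\mathbf{x})$, $\mathbf{x} \in \mathbb{R}^m$ [43]:

$$H\left(X_t^{(m,\tau)}\right) = -\int_{\mathbb{R}^m} p(\mathbf{x}) \log p(\mathbf{x})\, \mathrm{d}\mathbf{x}, \tag{2}$$

which does not depend on $t$, thanks to the stationarity of the signal. Shannon entropy measures the total information contained in the process $X_t^{(m,\tau)}$. For embedding dimension $m = 1$, it is independent of the sampling parameter $\tau$, and we write in the following $H(X)$.

Rényi $q$-order entropy $R_q(X_t^{(m,\tau)})$ is defined as another functional of the probability density [44]:

$$R_q\left(X_t^{(m,\tau)}\right) = \frac{1}{1-q} \log \int_{\mathbb{R}^m} p(\mathbf{x})^q\, \mathrm{d}\mathbf{x}. \tag{3}$$

When $q \to 1$, the Rényi $q$-order entropy converges to the Shannon entropy.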
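The convergence of the Rényi entropy to the Shannon entropy can be checked numerically. The sketch below uses a discrete probability vector as a stand-in for the continuous density (an assumption made only to keep the example exact and short); the function names are illustrative.

```python
import math
from typing import Sequence


def shannon_entropy(p: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete probability vector."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)


def renyi_entropy(p: Sequence[float], q: float) -> float:
    """Renyi entropy of order q (in nats); q = 1 falls back to Shannon."""
    if q == 1.0:
        return shannon_entropy(p)
    return math.log(sum(pi ** q for pi in p if pi > 0.0)) / (1.0 - q)


probabilities = [0.5, 0.25, 0.125, 0.125]
h_shannon = shannon_entropy(probabilities)
for order in (0.5, 0.9, 0.999, 1.001, 1.1, 2.0):
    print(order, renyi_entropy(probabilities, order), h_shannon)
```

The printed values decrease with the order and bracket the Shannon entropy more and more tightly as the order approaches 1.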

3.1.2. Entropy Rates

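The Shannon entropy rate is defined as:

$$h^{(\tau)}(X) = \lim_{m \to \infty} \frac{H\left(X_t^{(m,\tau)}\right)}{m}, \tag{4}$$

which can be shown to be equivalent to:

$$h^{(\tau)}(X) = \lim_{m \to \infty} \left[ H\left(X_{t+\tau}^{(m+1,\tau)}\right) - H\left(X_t^{(m,\tau)}\right) \right]. \tag{5}$$

We define the $m$-order Shannon entropy rate as measuring how much the Shannon entropy increases between two consecutive time-embedding dimensions $m$ and $m+1$:

$$h^{(m,\tau)}(X) = H\left(X_{t+\tau}^{(m+1,\tau)}\right) - H\left(X_t^{(m,\tau)}\right) \tag{6}$$

$$\phantom{h^{(m,\tau)}(X)} = H\left(x_{t+\tau} \,\middle|\, X_t^{(m,\tau)}\right). \tag{7}$$

It quantifies the variation of the total information in the time-embedded process when the embedding dimension $m$ is increased by 1. Interpreting Equation (6), it measures information in the $m+1$ coordinates of $X_{t+\tau}^{(m+1,\tau)}$ that is not contained in the $m$ coordinates of $X_t^{(m,\tau)}$. Following Equation (7), it can also be interpreted as the new information brought by the extra sample $x_{t+\tau}$ when the set of $m$ samples, $X_t^{(m,\tau)}$, is already known.

Rényi order-$q$ entropy rate and $m$-order Rényi order-$q$ entropy rate can be defined in the same way, replacing Shannon entropy $H$ by Rényi order-$q$ entropy $R_q$ in Equations (4) and (6), respectively. Nevertheless, it should be emphasized that Rényi order-$q$ entropy is lacking the chain rule of conditional probabilities as soon as $q \neq 1$; therefore, Equation (7) does not hold for Rényi order-$q$ entropy, unless $q = 1$ (Shannon entropy).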

3.1.3. Mutual Information

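The mutual information (MI) of two processes measures the information they share [43]. MI is the Kullback–Leibler divergence [45] between the joint probability function and the product of the marginals, which would express the joint probability function if the two processes were independent. For time-embedded processes $X_t^{(m,\tau)}$ and $Y_{t'}^{(p,\tau)}$, it reads:

$$I\left(X_t^{(m,\tau)}, Y_{t'}^{(p,\tau)}\right) = \int p(\mathbf{x},\mathbf{y}) \log \frac{p(\mathbf{x},\mathbf{y})}{p(\mathbf{x})\, p(\mathbf{y})}\, \mathrm{d}\mathbf{x}\, \mathrm{d}\mathbf{y}.$$

Mutual information is symmetrical with respect to its two arguments. If $X$ and $Y$ are stationary processes, the mutual information $I(X_t^{(m,\tau)}, Y_{t'}^{(p,\tau)})$ depends only on $t'-t$, the time difference between $X_t^{(m,\tau)}$ and $Y_{t'}^{(p,\tau)}$.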

Auto-mutual information:

If $X = Y$ and $t' = t + p\tau$, the MI measures the information shared by two consecutive chunks of the same process $X$, both sampled at $\tau$. This quantity is sometimes called “information storage” [33,46,47], and we refer to it as the auto-mutual information (AMI) of the process $X$:

$$I^{(m,p,\tau)}(X) = I\left(X_t^{(m,\tau)}, X_{t+p\tau}^{(p,\tau)}\right).$$

Remarking that the concatenation of $X_t^{(m,\tau)}$ and $X_{t+p\tau}^{(p,\tau)}$ is nothing but the $(m+p)$-dimensional embedded vector:

$$\left(X_{t+p\tau}^{(p,\tau)},\, X_t^{(m,\tau)}\right) = X_{t+p\tau}^{(m+p,\tau)},$$

the AMI depends on the embedding dimensions $(m,p)$ and the sampling time $\tau$ only. AMI of order $(m,p)$ measures the shared information between consecutive $m$-points and $p$-points dynamics, i.e., by how much the uncertainty of future $p$-points dynamics is reduced if the previous $m$-points’ dynamics is known.

Thanks to the symmetry of the MI with respect to its two arguments and invoking the stationarity of $X$, the AMI is invariant when exchanging $m$ and $p$:

$$I^{(m,p,\tau)}(X) = I^{(p,m,\tau)}(X).$$

We emphasize that the MI, and therefore AMI, are defined only for the Shannon entropy. The expression of the Rényi order-$q$ mutual information is not unique as soon as $q \neq 1$, and we do not consider it here.

Special case $p = 1$:

If $p = 1$, the AMI is directly related to the Shannon entropy rate of order $m$:

$$I^{(m,1,\tau)}(X) = H(X) - h^{(m,\tau)}(X), \tag{11}$$

or equivalently:

$$h^{(m,\tau)}(X) = H(X) - I^{(m,1,\tau)}(X). \tag{12}$$

Interestingly, this splits the entropy rate $h^{(m,\tau)}(X)$ into two contributions. The first one is the total entropy $H(X)$ of the process, which only depends on the one-point statistics and so does not describe the dynamics of $X$. The second term is the AMI $I^{(m,1,\tau)}(X)$, which depends on the dynamics of the process $X$, irrespective of its variance [48].
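The relation between AMI and entropy rate follows in three lines from writing the mutual information as a sum of entropies. The derivation below is a sketch in an assumed notation, since the original symbols are not available here: $X_t^{(m,\tau)}$ is the $m$-dimensional embedded vector, $x_{t+\tau}$ the next sample, $h^{(m,\tau)}$ the $m$-order entropy rate and $I^{(m,1,\tau)}$ the AMI with $p = 1$.

```latex
\begin{align*}
I^{(m,1,\tau)}(X)
  &= H\big(X_t^{(m,\tau)}\big) + H\big(x_{t+\tau}\big)
     - H\big(X_{t+\tau}^{(m+1,\tau)}\big)
     && \text{(MI as a sum of entropies)} \\
  &= H(X) - \Big[ H\big(X_{t+\tau}^{(m+1,\tau)}\big)
     - H\big(X_t^{(m,\tau)}\big) \Big]
     && \text{(stationarity: } H(x_{t+\tau}) = H(X)\text{)} \\
  &= H(X) - h^{(m,\tau)}(X)
     && \text{(definition of the $m$-order entropy rate)}
\end{align*}
```

The second line uses only stationarity, which is why the static contribution reduces to the one-point entropy whatever the embedding dimension.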

Special case of a process with Gaussian distribution:

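For illustration, if $X$ is a stationary Gaussian process, hence fully defined by its variance $\sigma^2$ and normalized correlation function $c(\tau)$, we have:

$$H\left(X_t^{(m,\tau)}\right) = \frac{m}{2} \log\left(2\pi e \sigma^2\right) + \frac{1}{2} \log\left|\Sigma_m\right|,$$

$$I^{(m,p,\tau)}(X) = \frac{1}{2} \log \frac{\left|\Sigma_m\right| \left|\Sigma_p\right|}{\left|\Sigma_{m+p}\right|},$$

where $\Sigma_m$ is the $m \times m$ correlation matrix of the process $X$; $[\Sigma_m]_{ij} = c(|i-j|\tau)$ and $|\Sigma_m|$ is its determinant. For the particular case $m = p = 1$, we have:

$$h^{(1,\tau)}(X) = \frac{1}{2} \log\left(2\pi e \sigma^2\right) + \frac{1}{2} \log\left(1 - c(\tau)^2\right),$$

which clearly illustrates the decomposition of the entropy rate according to Equation (11): the first term $H(X) = \frac{1}{2}\log(2\pi e \sigma^2)$ depends only on the static (one-point) statistics (via $\sigma$), and the second term, $-I^{(1,1,\tau)}(X)$ with $I^{(1,1,\tau)}(X) = -\frac{1}{2}\log(1 - c(\tau)^2)$, depends on the temporal dynamics (and in this simple case, only on the dynamics, via the auto-correlation function $c(\tau)$).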
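The Gaussian case lends itself to a quick numerical check. The sketch below evaluates the standard closed forms for a stationary Gaussian process with embedding dimensions equal to 1; the function names and the numerical values of the standard deviation and correlation are illustrative assumptions.

```python
import math


def gaussian_entropy(sigma: float) -> float:
    """One-point Shannon entropy (nats) of a Gaussian with std sigma."""
    return 0.5 * math.log(2.0 * math.pi * math.e * sigma ** 2)


def gaussian_ami(c: float) -> float:
    """AMI between two samples of a Gaussian process with correlation c."""
    return -0.5 * math.log(1.0 - c ** 2)


def gaussian_entropy_rate(sigma: float, c: float) -> float:
    """Order-1 entropy rate: entropy of one sample given the previous one."""
    conditional_variance = sigma ** 2 * (1.0 - c ** 2)
    return 0.5 * math.log(2.0 * math.pi * math.e * conditional_variance)


sigma, c = 2.0, 0.8
H = gaussian_entropy(sigma)
I = gaussian_ami(c)
h = gaussian_entropy_rate(sigma, c)
print(H, I, h, H - I)  # the last two values coincide

# Rescaling the signal shifts the entropy but leaves the AMI untouched.
print(gaussian_entropy(2.0 * sigma) - H, math.log(2.0))
```

Doubling the amplitude adds log 2 to both the entropy and the entropy rate, whereas the AMI, which depends on the correlation only, is unchanged.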

3.2. Features from Complexity Theory

In the 1960s, Kolmogorov and Sinai adapted Shannon’s information theory to the study of dynamical systems. The divergence of trajectories starting from different, but indistinguishable initial conditions can be pictured as creating uncertainty, and hence information. Kolmogorov complexity (KC), also known as the Kolmogorov–Sinai entropy and denoted $h_{\mathrm{KS}}$ in the following, measures the mean rate of creation of information by a dynamical system with ergodic probability measure $\rho$. KC is constructed exactly as the Shannon entropy rate from information theory, using Equation (4) and the same functional form as in Equation (2), but using the density $\rho$ of trajectories in phase space instead of the probability density $p$. In the early 1980s, the Eckmann–Ruelle entropy $K_2$ [29,49] was introduced following the same steps, but using the functional form of the Rényi order-two entropy (Equation (3)). The interest of $K_2$ lies in its easier and hence faster computation from experimental time series, which was at the time a challenging issue.

Kolmogorov–Sinai and Eckmann–Ruelle entropies:

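The ergodic theory of chaos provides a powerful framework to estimate the density of trajectories in the phase space of a chaotic dynamical system [49]. For an experimental or numerical signal, it amounts to assimilating the phase space average to the time average. Given a distance $d(\cdot,\cdot)$, usually defined with the $L^2$ or the $L^\infty$ norm, in the $m$-dimensional embedded space, the local quantity:

$$C_i^m(\varepsilon) = \frac{1}{N_m}\, \#\left\{ j : d\left(X_i^{(m,\tau)}, X_j^{(m,\tau)}\right) \le \varepsilon \right\}, \tag{18}$$

with $N_m = N-(m-1)\tau$ the number of embedded vectors, provides, up to a factor $\varepsilon^m$, an estimate of the local density $\rho$ in the $m$-dimensional phase space around the point $X_i^{(m,\tau)}$. The following averages:

$$\Phi^m(\varepsilon) = \frac{1}{N_m} \sum_{i} \log C_i^m(\varepsilon), \tag{19}$$

$$C^m(\varepsilon) = \frac{1}{N_m} \sum_{i} C_i^m(\varepsilon), \tag{20}$$

are then used to provide the following equivalent definitions of the complexity measures [49]:

$$h_{\mathrm{KS}} = \lim_{\varepsilon \to 0}\, \lim_{m \to \infty}\, \lim_{N \to \infty} \left( \Phi^m(\varepsilon) - \Phi^{m+1}(\varepsilon) \right), \tag{21}$$

$$K_2 = \lim_{\varepsilon \to 0}\, \lim_{m \to \infty}\, \lim_{N \to \infty} \log \frac{C^m(\varepsilon)}{C^{m+1}(\varepsilon)}. \tag{22}$$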

3.2.1. Approximate Entropy

Approximate entropy (ApEn) was introduced by Pincus in 1991 for the analysis of babies’ heart rate [50]. It is obtained by relaxing the definition (21) of $h_{\mathrm{KS}}$ and working with a fixed embedding dimension $m$ and a fixed box size $\varepsilon$, often expressed in units of the standard deviation $\sigma$ of the signal as $\varepsilon = r\sigma$. ApEn is defined as:

$$\mathrm{ApEn}(m,\varepsilon) = \Phi^m(\varepsilon) - \Phi^{m+1}(\varepsilon). \tag{23}$$

On the practical side, and in order to have a well-defined $\Phi^m(\varepsilon)$ in (19), the counting of neighbors in the definition (18) allows self-matches $j = i$. This ensures that $C_i^m(\varepsilon) > 0$, which is required by (19). ApEn depends on the number of points $N$ in the time series. Assuming $N$ is large enough, we have:

$$\mathrm{ApEn}(m,\varepsilon) \simeq -\left\langle \log \frac{C_i^{m+1}(\varepsilon)}{C_i^{m}(\varepsilon)} \right\rangle_i.$$

We interpret ApEn as an estimate of the $m$-order Kolmogorov–Sinai entropy $h_{\mathrm{KS}}^{(m)}$ at finite resolution $\varepsilon$. The larger $N$, the better the estimate. More interesting is that the non-vanishing value of $\varepsilon$ in its definition makes ApEn insensitive to details at scales lower than $\varepsilon$. On the one hand, this is very interesting when considering an experimental (therefore noisy) signal: choosing $\varepsilon$ larger than the rms of the noise (if known) filters the noise, and ApEn is then expected to measure only the complexity of the underlying dynamics. This was the main motivation of Pincus and explains the success of ApEn. On the other hand, not taking the limits $\varepsilon \to 0$ and $m \to \infty$ makes ApEn an ill-defined quantity that has no reason to behave like $h_{\mathrm{KS}}$. In addition, only very few analytical results have been reported on the bias and the variance of ApEn.

Although $m$ should in theory be larger than the dimension of the underlying dynamical system, ApEn is defined and used for any possible value of $m$, and most applications reported in the literature use small $m$ (1 or 2) without any analytical support, but with great success [50,51].
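A brute-force version of ApEn can be sketched as follows. This is an illustration under stated assumptions rather than the implementation used in the study: the max-norm distance, the default values `m=2` and `r=0.2` (box size in units of the standard deviation), the unit delay and all function names are choices made here for the example.

```python
import math
import random
from typing import List, Sequence


def embed(x: Sequence[float], m: int, tau: int) -> List[List[float]]:
    """Delay vectors (x[t], x[t - tau], ..., x[t - (m - 1) * tau])."""
    start = (m - 1) * tau
    return [[x[t - j * tau] for j in range(m)] for t in range(start, len(x))]


def phi(x: Sequence[float], m: int, tau: int, eps: float) -> float:
    """Average of log C_i^m(eps); self-matches are counted, so C_i^m > 0."""
    vectors = embed(x, m, tau)
    n = len(vectors)
    total = 0.0
    for u in vectors:
        # Max-norm neighbors within eps (u itself is always one of them).
        count = sum(
            1 for v in vectors
            if max(abs(a - b) for a, b in zip(u, v)) <= eps
        )
        total += math.log(count / n)
    return total / n


def approximate_entropy(x: Sequence[float], m: int = 2, r: float = 0.2,
                        tau: int = 1) -> float:
    """ApEn = phi^m - phi^(m+1), with eps = r * std(x) (assumed defaults)."""
    mean = sum(x) / len(x)
    sigma = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x))
    eps = r * sigma
    return phi(x, m, tau, eps) - phi(x, m + 1, tau, eps)


random.seed(0)
periodic = [math.sin(2.0 * math.pi * t / 10.0) for t in range(200)]
noise = [random.gauss(0.0, 1.0) for _ in range(200)]
apen_periodic = approximate_entropy(periodic)
apen_noise = approximate_entropy(noise)
print(apen_periodic, apen_noise)
```

A perfectly periodic signal yields a value close to zero, whereas white noise yields a clearly larger one; the quadratic cost of the neighbor search is acceptable for short windows only.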

3.2.2. Sample Entropy

A decade after Pincus’s seminal paper, Richman and Moorman pointed out that ApEn contains in its very definition a source of bias and lacks, in some cases, “relative consistency”. They defined sample entropy (SampEn) on the same grounds as ApEn:

$$\mathrm{SampEn}(m,\varepsilon) = \log C^{m}(\varepsilon) - \log C^{m+1}(\varepsilon),$$

so that:

$$\mathrm{SampEn}(m,\varepsilon) = -\log \frac{C^{m+1}(\varepsilon)}{C^{m}(\varepsilon)}.$$

On the practical side, the counting of neighbors in (18) does not allow self-matches. $C_i^m(\varepsilon)$ may vanish, but when averaging over all points in Equation (20), the correlation integral $C^m(\varepsilon) > 0$. In practice, SampEn is considered to improve on ApEn as it shows lower sensitivity to parameter tuning and data sample size [52,53].

We interpret SampEn as an estimate of the $m$-order Eckmann–Ruelle entropy $K_2^{(m)}$ at finite resolution $\varepsilon$.
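SampEn differs from ApEn in two respects that a short sketch makes explicit: self-matches are excluded, and the logarithm is taken after averaging. The code below is a minimal illustration, not the implementation used in the study; the max-norm distance, the defaults `m=2` and `r=0.2`, the unit delay and the function names are assumptions.

```python
import math
import random
from typing import List, Sequence


def embed(x: Sequence[float], m: int, tau: int) -> List[List[float]]:
    """Delay vectors (x[t], x[t - tau], ..., x[t - (m - 1) * tau])."""
    start = (m - 1) * tau
    return [[x[t - j * tau] for j in range(m)] for t in range(start, len(x))]


def correlation_integral(x: Sequence[float], m: int, tau: int,
                         eps: float) -> float:
    """Fraction of distinct pairs of embedded vectors closer than eps."""
    vectors = embed(x, m, tau)
    n = len(vectors)
    matches = 0
    for i in range(n):
        for j in range(i + 1, n):  # j > i: self-matches are excluded
            if max(abs(a - b) for a, b in zip(vectors[i], vectors[j])) <= eps:
                matches += 1
    return matches / (n * (n - 1) / 2.0)


def sample_entropy(x: Sequence[float], m: int = 2, r: float = 0.2,
                   tau: int = 1) -> float:
    """SampEn = log C^m - log C^(m+1), with eps = r * std(x)."""
    mean = sum(x) / len(x)
    sigma = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x))
    eps = r * sigma
    c_m = correlation_integral(x, m, tau, eps)
    c_m1 = correlation_integral(x, m + 1, tau, eps)
    if c_m == 0.0 or c_m1 == 0.0:
        raise ValueError("no matches found; increase r or the window size")
    return math.log(c_m) - math.log(c_m1)


random.seed(0)
periodic = [math.sin(2.0 * math.pi * t / 10.0) for t in range(200)]
noise = [random.gauss(0.0, 1.0) for _ in range(200)]
sampen_periodic = sample_entropy(periodic)
sampen_noise = sample_entropy(noise)
print(sampen_periodic, sampen_noise)
```

Because no self-match props up the counts, SampEn can be undefined on very short or very irregular windows, which the explicit error makes visible.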

3.2.3. Estimation

In the following, estimated values of ApEn and SampEn are obtained with our own MATLAB implementation, based on PhysioNet packages. We used the commonly-accepted value,

, with

the standard deviation of

X, and

. For all quantities, we used

with

Hz the cutoff frequency above which FHR times series essentially contain no relevant information [

13]; this time delay corresponds to

s.

3.3. Connecting Complexity Theory and Information Theory

We consider here for clarity only the relation between ApEn and $m$-order Shannon entropy rate, although the very same relation holds between SampEn and the $m$-order Rényi order-two entropy rate. In information theory terms, ApEn appears as a particular estimator of the $m$-order Shannon entropy rate that computes the probability density by counting, in the $m$-dimensional embedded space, the number of neighbors in a hypersphere of radius $\varepsilon$, which can be interpreted as a particular kernel estimation of the probability density.

3.3.1. Limit of Large Datasets and Vanishing $\varepsilon$: Exact Relation

When the size $\varepsilon$ of the spheres tends to 0, the expected value of ApEn for a stochastic signal $X$ with any smooth probability density is related, in the limit $N \to \infty$, to the $m$-order Shannon entropy rate [28]:

$$\mathrm{ApEn}(m,\varepsilon) \simeq h^{(m,\tau)}(X) - \log \varepsilon.$$

Both terms involve $m$-points correlations of the process $X$. This relation allows a quantitative comparison of ApEn with the $m$-order Shannon entropy rate $h^{(m,\tau)}(X)$. The $-\log \varepsilon$ difference corresponds to the paving, with hyperspheres of radius $\varepsilon$, of the continuous $m$-dimensional space over which the probability $p$ involved in Equation (2) is defined and, thus, $H(X_t^{(m,\tau)})$. This paving defines a discrete phase space, over which Equations (18), (19) and (23) operate to define ApEn [54]. This illustrates that, for a stochastic signal, ApEn diverges logarithmically as the size $\varepsilon$ approaches 0, as expected for $h_{\mathrm{KS}}$. Fortunately, $\varepsilon$ is fixed in the definition of ApEn, which allows one in practice to compute it for any signal/process.

3.3.2. New Features

Having recognized the success of ApEn and remembering its relation to $h^{(m,\tau)}$, it seems interesting to probe other $m$-order Shannon entropy rate estimators. A straightforward improvement would be to consider a smooth, e.g., Gaussian, kernel of width $\varepsilon$ instead of the step function used in (18). We prefer to reverse the perspective and use a $k$-nearest-neighbor ($k$-NN) estimate. Instead of counting the number of neighbors in a sphere of size $\varepsilon$, this approach searches for the size of the sphere that accommodates $k$ neighbors. In practice, we compute the entropy $H$ with the Kozachenko and Leonenko estimator [30,55], which we denote $\hat{H}$. We compute the auto-mutual information $I^{(m,p,\tau)}$ with the Kraskov et al. estimator [56], which we denote $\hat{I}^{(m,p,\tau)}$. We then combine the two according to Equation (11) to get an estimator $\hat{h}^{(m,\tau)}$ of the $m$-order Shannon entropy rate. We use

neighbors and set

(see

Section 3.2.3).
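The k-NN approach can be sketched with brute-force neighbor searches. The code below is an illustrative assumption-laden version, not the study's implementation: it uses the max-norm form of the Kozachenko-Leonenko entropy estimator and the first algorithm of Kraskov et al. for mutual information, with `k=5`, a synthetic first-order autoregressive Gaussian signal, unit delay and embedding dimensions equal to 1, all chosen here for the example.

```python
import math
import random
from typing import List, Sequence, Tuple

EULER_GAMMA = 0.5772156649015329
Point = Tuple[float, ...]


def digamma_int(n: int) -> float:
    """Digamma at a positive integer: psi(n) = -gamma + sum_{j<n} 1/j."""
    return -EULER_GAMMA + sum(1.0 / j for j in range(1, n))


def max_norm(u: Point, v: Point) -> float:
    return max(abs(a - b) for a, b in zip(u, v))


def kth_neighbor_distances(points: Sequence[Point], k: int) -> List[float]:
    """Brute-force distance from each point to its k-th nearest neighbor."""
    result = []
    for i, u in enumerate(points):
        dists = sorted(max_norm(u, v) for j, v in enumerate(points) if j != i)
        result.append(dists[k - 1])
    return result


def kl_entropy(points: Sequence[Point], k: int = 5) -> float:
    """Kozachenko-Leonenko entropy estimate (nats), max-norm version."""
    n, dim = len(points), len(points[0])
    radii = kth_neighbor_distances(points, k)
    # A max-norm ball of radius r has volume (2 r) ** dim.
    mean_log_volume = dim * sum(math.log(2.0 * r) for r in radii) / n
    return digamma_int(n) - digamma_int(k) + mean_log_volume


def ksg_mutual_information(xs: Sequence[Point], ys: Sequence[Point],
                           k: int = 5) -> float:
    """Kraskov et al. mutual information estimate (their first algorithm)."""
    n = len(xs)
    joint = [x + y for x, y in zip(xs, ys)]
    radii = kth_neighbor_distances(joint, k)
    acc = 0.0
    for i in range(n):
        # Marginal neighbors strictly inside the joint-space k-NN radius.
        nx = sum(1 for j in range(n)
                 if j != i and max_norm(xs[i], xs[j]) < radii[i])
        ny = sum(1 for j in range(n)
                 if j != i and max_norm(ys[i], ys[j]) < radii[i])
        acc += digamma_int(nx + 1) + digamma_int(ny + 1)
    return digamma_int(k) + digamma_int(n) - acc / n


# Synthetic test signal (assumption): stationary AR(1) Gaussian process.
random.seed(1)
a, n_points = 0.6, 600
x = [random.gauss(0.0, 1.0 / math.sqrt(1.0 - a * a))]
for _ in range(n_points):
    x.append(a * x[-1] + random.gauss(0.0, 1.0))

past = [(x[t],) for t in range(n_points)]
future = [(x[t + 1],) for t in range(n_points)]
H_hat = kl_entropy(past)                       # static part
I_hat = ksg_mutual_information(past, future)   # dynamical part (AMI)
h_hat = H_hat - I_hat                          # entropy rate, by difference
print(H_hat, I_hat, h_hat)
```

For this synthetic process the exact values are known (entropy about 1.64 nats, AMI about 0.22 nats, entropy rate about 1.42 nats), so the three estimates can be compared against them. For real window sizes a tree-based neighbor search would replace the quadratic loops.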

We report in the next section our results for the five features when setting and compare their performances in detecting acidosis. The dependence of the $m$-order entropy rate (and its estimators) on $m$ is expected to give some insight into the dimension of the attractor of the underlying dynamical system, but as we have pointed out, the dynamics is indeed contained in the AMI part of the entropy rate. This is why we further explore the effect of varying the embedding dimensions $m$ and $p$ on the AMI estimator $\hat{I}^{(m,p,\tau)}$.

5. Discussion, Conclusions and Perspectives

We now discuss the interpretation of Shannon entropy and AMI measurements in different stages of labor. The fetuses are classified as normal or acidotic depending on a post-delivery pH measurement, which gives a diagnosis of acidosis at delivery only. There is no information on the health of the fetuses during labor.

The physiological interpretation of a feature, and especially its relation to specific FHR patterns, such as those detailed in [2,58], is a difficult task that is only scarcely reported in the literature [59,60]. In this article, we have averaged our results over large numbers of (normal or acidotic) subjects, which jeopardizes any precise interpretation in terms of a specific FHR pattern that may appear only intermittently.

5.1. Acidosis Detection in the First Stage

We can nevertheless suggest that the value of the Shannon entropy

H is related to the frequency of decelerations in the FHR signals. Indeed, Shannon entropy strongly depends on the standard deviation of the signal (e.g., see Equation (

15)), which in turn depends on the variability in the observation window. A larger number of decelerations in the observation window deforms the PDF of the FHR signal by increasing its lower tail; in particular, this increases the width of the PDF and hence increases the standard deviation and the Shannon entropy. This explains our findings in

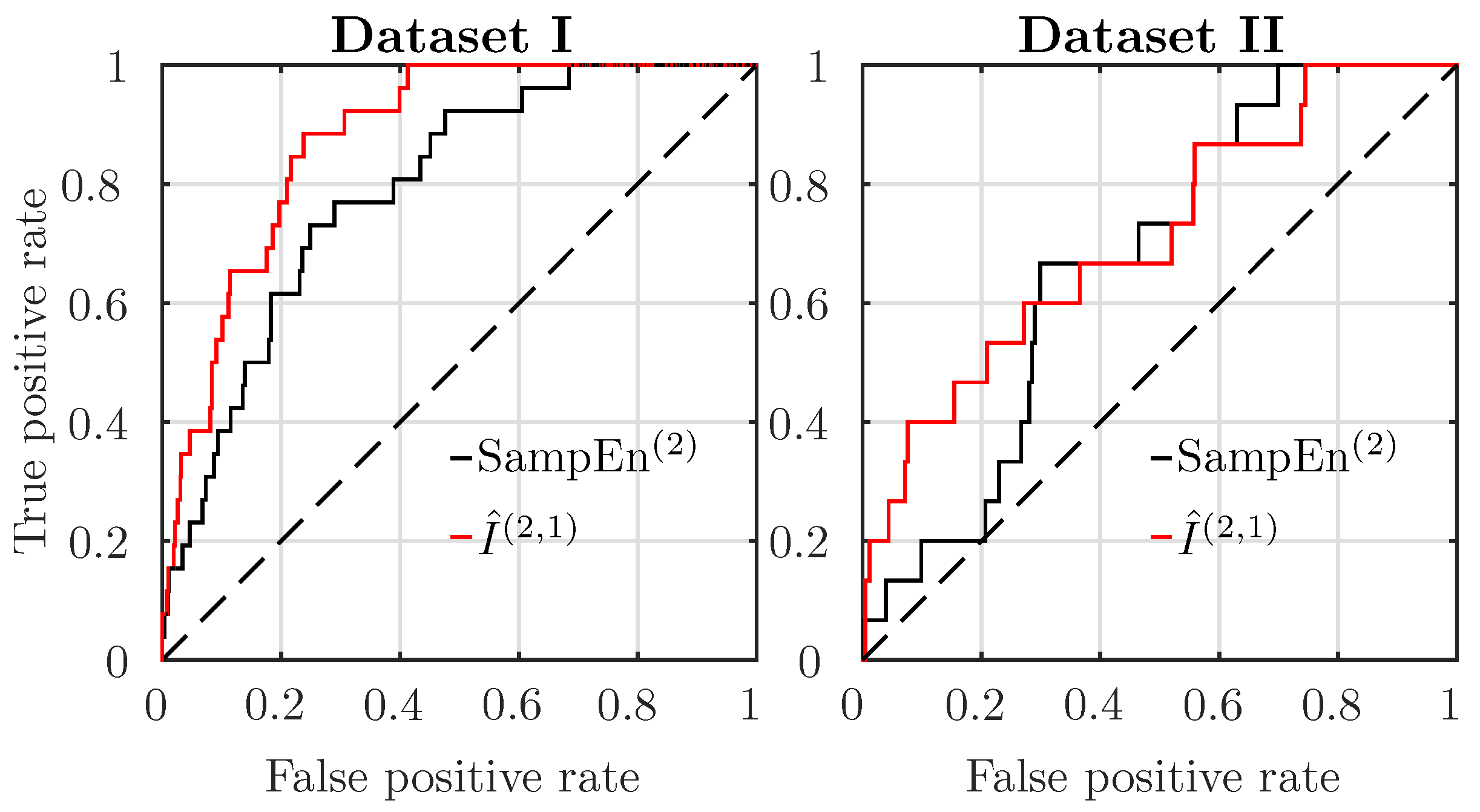

Figure 1 (for Dataset I).

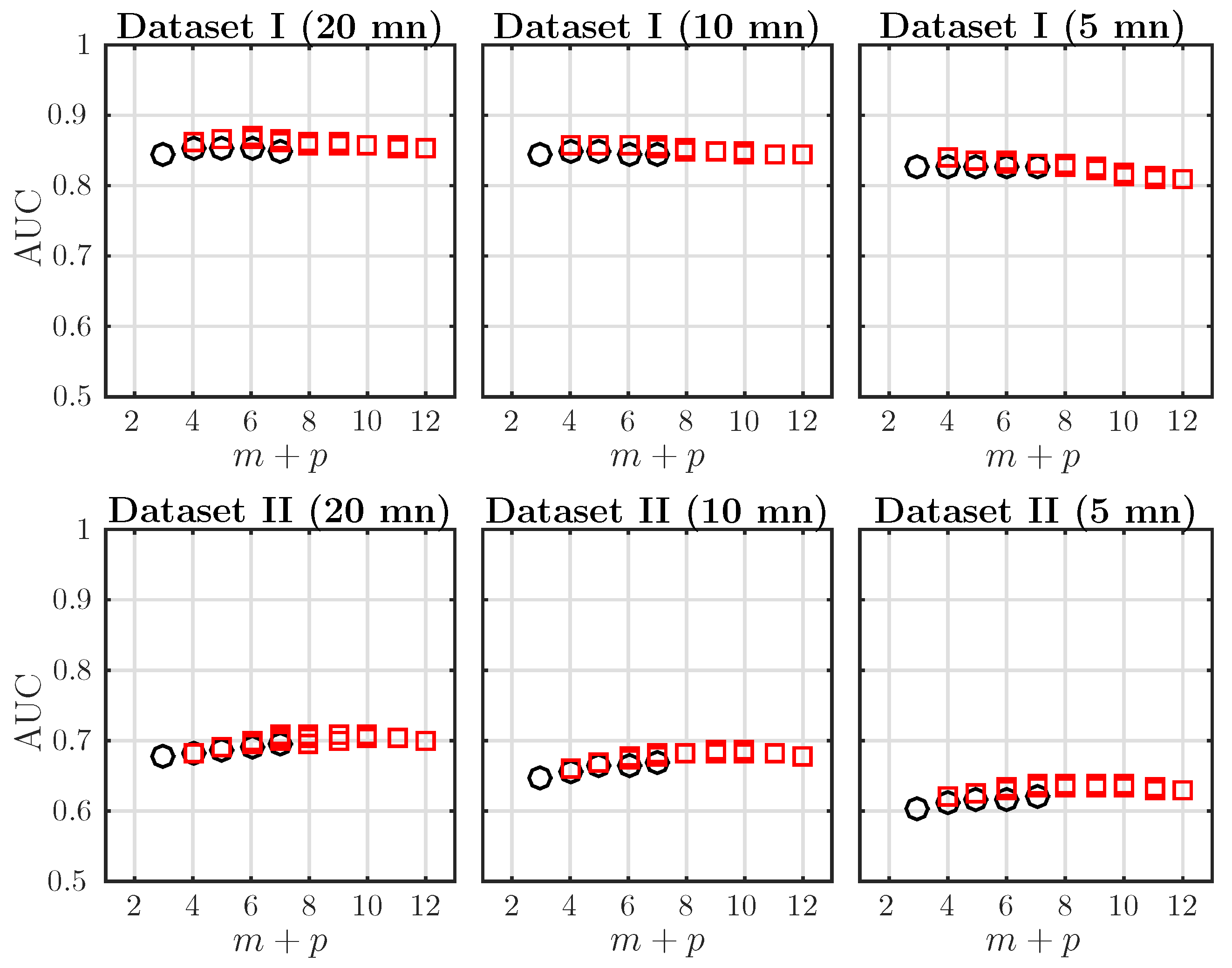

When acidosis develops in the first stage of labor, the Shannon auto-mutual information estimator significantly outperforms all other quantities both in terms of p-value and AUC. The performance of AMI is robust to changes in both the size of the observation window, and hence the number of points in the data, and the embedding dimensions $(m,p)$. In addition, the performance slightly increases when the total embedding dimension $m+p$ increases, although one must beware of the curse of dimensionality.

For abnormal subjects from Dataset II, AMI is not able to detect acidosis using data from Stage 1. This suggests that acidosis develops later, in the second stage of labor.

For all datasets, AMI computed with

s is always larger for acidotic subjects than for normal subjects. This is in agreement with results obtained with ApEn and SampEn, which are both lower for acidotic subjects. This shows that FHR signals classified as abnormal have a stronger dependence structure at a small scale than normal ones. We can relate this increase of the dependence structure of acidotic FHR to the short-term variability and to its coupling with particular large-scale patterns. For example, a sinusoidal FHR pattern [2], especially if its duration is long, should give a larger value of the AMI, because its large-scale dynamics is highly predictable. As another example, we expect variable decelerations (with an asymmetrical V-shape) and late decelerations (with a symmetrical U-shape and/or reduced variability) to impact AMI differently. Of course, the choice of the embedding parameter $\tau$ is then crucial, and this is currently under investigation.

AMI and entropy rates depend on the dynamics as they operate on time-embedded vectors. AMI focuses on nonlinear temporal dynamics, while being insensitive to the dominant static information. AMI is independent of the standard deviation, which on the contrary contributes strongly to the Shannon entropy. This explains why AMI performs better than entropy rate estimates, such as ApEn, SampEn and the $k$-NN estimate $\hat{h}^{(m,\tau)}$, which depend also on the standard deviation.
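The independence of AMI from the standard deviation can be made explicit with a change of variable. The short derivation below is a sketch for the differential (continuous) Shannon quantities only, in an assumed notation: $Y = aX$ with $a \neq 0$ is a rescaled copy of the signal, $X^{(m,\tau)}$ its $m$-dimensional embedding, $h^{(m,\tau)}$ the $m$-order entropy rate and $I^{(m,p,\tau)}$ the AMI.

```latex
\begin{align*}
H\big(Y^{(m,\tau)}\big) &= H\big(X^{(m,\tau)}\big) + m \log|a|, \\
h^{(m,\tau)}(Y) &= H\big(Y^{(m+1,\tau)}\big) - H\big(Y^{(m,\tau)}\big)
                 = h^{(m,\tau)}(X) + \log|a|, \\
I^{(m,p,\tau)}(Y) &= H\big(Y^{(m,\tau)}\big) + H\big(Y^{(p,\tau)}\big)
                     - H\big(Y^{(m+p,\tau)}\big) \\
                  &= I^{(m,p,\tau)}(X) + \big(m + p - (m+p)\big)\log|a|
                   = I^{(m,p,\tau)}(X).
\end{align*}
```

Rescaling the signal therefore shifts the entropy and the entropy rate by a term in $\log|a|$, while the scale terms cancel exactly in the AMI.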

5.2. Acidosis Detection in Second Stage

The results reported for Stage 2 show a severe decrease in the performance of the five estimated quantities. Analyzing Stage 2 is far more challenging than analyzing Stage 1, which suggests that temporal dynamics in Stage 2 differ notably from those of Stage 1 [38] or simply that our database does not contain enough acidotic subjects in that case.

The AMI estimator $\hat{I}^{(m,p,\tau)}$ achieves the best performance in terms of p-value and AUC; this clearly underlines that the analysis of nonlinear temporal dynamics is critical for fetal acidosis detection in Stage 2. As in Stage 1, the AMI is always larger in Stage 2 for acidotic subjects than for normal subjects.

Although the Shannon entropy computed from the last 20 min of Stage 2 before delivery does not show a clear tendency in Figure 1 for Dataset II, Figure 6 clearly shows that $\hat{H}$ increases as labor progresses: this is probably related to the average increase of the number of decelerations, which is expected in both the normal and acidotic populations.

SampEn is also able to perform discrimination in Stage 2. From these observations, one may envision the definition of a new estimator that would measure the auto-mutual information using the Rényi order-two entropy by applying Equation (12). Nevertheless, it should be emphasized that Rényi order-$q$ entropy is lacking the chain rule of conditional probabilities as soon as $q \neq 1$, which may jeopardize any practical use of such an estimator.

5.3. Probing the Dynamics

Increasing the total embedding dimension in AMI improves the performance in the detection of acidotic subjects, in both the first and second stages. The best performance is found for different total embedding dimensions in the two datasets. This suggests that FHR dynamics differ between the two stages.

As seen in Equation (11), the Shannon entropy rate can be split into two contributions: one that depends only on static properties (the Shannon entropy, estimated by $\hat{H}$) and one that involves the signal dynamics (the auto-mutual information, estimated by $\hat{I}^{(m,p,\tau)}$). By following the time evolution of these two parts, we were able to relate Shannon entropy $\hat{H}$ with the evolution of the labor and AMI not only with the evolution of the labor, but also with possible acidosis. Looking at subjects for which the pushing phase is longer than 15 min, it clearly appears that all fetuses are affected by the pushing, as evidenced by a large increase of the Shannon entropy $\hat{H}$ and a small increase of AMI. Additionally, the increase of AMI is steeper for abnormal subjects than for normal ones, which may indicate different reactions to the pushing and can be related to specific pathological FHR patterns. When the pushing stage is long (Dataset II), fetuses reported as acidotic do not show any sign of acidosis until prolonged pushing. These fetuses appear as normal until delivery is near.

When acidosis develops during the first stage of labor, in Dataset I, we observe clearly that while AMI increases steadily till delivery for healthy fetuses, it increases faster for acidotic ones. This suggests that acidotic fetuses in Dataset I react to early labor, as early as one hour before pushing starts. This could not only indicate that some fetuses are prone to acidosis, but also may pave the way for an early detection of acidosis in this case.