Sparse Coding Algorithm with Negentropy and Weighted ℓ1-Norm for Signal Reconstruction

Abstract

1. Introduction

2. Materials and Methods

2.1. Least Absolute Shrinkage and Selection Operator
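The LASSO problem named in this heading is commonly written as min_x ½‖y − Ax‖²₂ + λ‖x‖₁, where the ℓ1 penalty drives small coefficients exactly to zero. The sketch below solves it with ISTA (iterative shrinkage-thresholding), a standard proximal-gradient method. It is an illustrative stand-in, not the paper's solver; the names `A`, `y`, `lam`, `ista_lasso` and the fixed iteration count are hypothetical.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink each entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista_lasso(A, y, lam, n_iter=500):
    """Minimize 0.5 * ||y - A x||_2^2 + lam * ||x||_1 with ISTA (hypothetical sketch)."""
    # Step size 1/L, where L = ||A||_2^2 is the Lipschitz constant of the gradient.
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)  # gradient of the least-squares term
        x = soft_threshold(x - step * grad, step * lam)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.standard_normal((40, 100)) / np.sqrt(40)
    x_true = np.zeros(100)
    x_true[[5, 37, 80]] = [1.0, -2.0, 1.5]
    y = A @ x_true
    x_hat = ista_lasso(A, y, lam=0.01, n_iter=2000)
    print("estimated support:", np.flatnonzero(np.abs(x_hat) > 0.1))
```

With `A` equal to the identity, a single ISTA step already returns `soft_threshold(y, lam)`, which is a quick way to check the shrinkage behaviour.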

2.2. Proposed Minimization Formulation

2.3. Algorithm

2.3.1. Negentropy Maximization
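Negentropy is defined as J(y) = H(y_gauss) − H(y), where y_gauss is a Gaussian variable with the same covariance as y; it is nonnegative and zero only for Gaussian y. Because differential entropy is hard to estimate directly, a widely used approximation (Hyvärinen's, familiar from FastICA) is J(y) ∝ [E{G(y)} − E{G(ν)}]² with G(u) = log cosh(u) and ν ~ N(0, 1). The sketch below implements that approximation as an assumption; the exact estimator used in this paper is not reproduced, and all function names are hypothetical.

```python
import numpy as np


def log_cosh(u):
    # Numerically stable log(cosh(u)) = |u| + log1p(exp(-2|u|)) - log(2)
    a = np.abs(u)
    return a + np.log1p(np.exp(-2.0 * a)) - np.log(2.0)


def gaussian_reference(n_grid=200001, half_width=12.0):
    """E{G(nu)} for nu ~ N(0, 1), computed by a Riemann sum on a fine grid."""
    u = np.linspace(-half_width, half_width, n_grid)
    pdf = np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)
    du = u[1] - u[0]
    return float(np.sum(log_cosh(u) * pdf) * du)


def negentropy_approx(samples):
    """Approximate negentropy (E{G(y)} - E{G(nu)})^2 with G = log cosh (hypothetical helper)."""
    y = np.asarray(samples, dtype=float)
    y = (y - y.mean()) / y.std()  # standardize: zero mean, unit variance
    return float((np.mean(log_cosh(y)) - gaussian_reference()) ** 2)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    print("Gaussian samples:", negentropy_approx(rng.standard_normal(200_000)))
    print("Laplacian samples:", negentropy_approx(rng.laplace(size=200_000)))
```

Heavy-tailed (super-Gaussian) samples such as Laplacian ones yield a clearly larger value than Gaussian samples, which is the property a negentropy-based criterion relies on.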

2.3.2. Weighted ℓ1-Norm and FOBOS
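In generic form, a FOBOS (forward-backward splitting) iteration on an objective f(x) + λ Σ_i w_i |x_i| alternates an explicit gradient step on the smooth term f with a proximal (backward) step on the weighted ℓ1 term, and that proximal step has a closed-form, entry-wise solution. The derivation below is a standard sketch with hypothetical symbols (step size η_k, weights w_i, small constant ε); the paper's own smooth term and weight rule are not reproduced. The last line shows one common weight choice, the reweighted ℓ1 rule of Candès, Wakin, and Boyd, given here only as an assumption.

```latex
\begin{aligned}
\mathbf{v}^{(k)} &= \mathbf{x}^{(k)} - \eta_k \nabla f\big(\mathbf{x}^{(k)}\big)
  && \text{(forward: gradient step)}\\
\mathbf{x}^{(k+1)} &= \arg\min_{\mathbf{x}} \tfrac{1}{2}\big\|\mathbf{x}-\mathbf{v}^{(k)}\big\|_2^2
  + \eta_k \lambda \sum_i w_i |x_i|
  && \text{(backward: proximal step)}\\
x_i^{(k+1)} &= \operatorname{sign}\big(v_i^{(k)}\big)\,
  \max\big(|v_i^{(k)}| - \eta_k \lambda w_i,\; 0\big)
  && \text{(closed form, entry-wise)}\\
w_i &= \frac{1}{|x_i| + \varepsilon}
  && \text{(one common reweighting rule)}
\end{aligned}
```

Larger weights shrink an entry harder, so entries that are already small are pushed to zero while large entries are penalized less than under a plain ℓ1 norm.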

Algorithm 1: Proposed algorithm for sparse signal reconstruction with negentropy and weighted ℓ1-norm

Task: Estimate the sparse signal by minimizing

Initialization: input signal matrix , system noise and proper and . Initialize and .

Main iteration:
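Algorithm 1 follows a Task / Initialization / Main iteration structure whose symbols did not survive. The sketch below shows, under stated assumptions, what a generic FOBOS main loop with a weighted ℓ1 penalty can look like: the smooth term enters only through a caller-supplied gradient `grad_f`, weights start at one and are refreshed after each outer stage with the reweighting rule w_i = 1/(|x_i| + ε). In the demo, the least-squares (MSE) gradient is a stand-in; the paper's negentropy-based term is not reproduced, and every name and default value here is hypothetical.

```python
import numpy as np


def weighted_soft_threshold(v, thresholds):
    """Entry-wise prox of sum_i t_i |x_i|: shrink v_i toward zero by t_i."""
    return np.sign(v) * np.maximum(np.abs(v) - thresholds, 0.0)


def fobos_weighted_l1(grad_f, n, lam, step, n_outer=5, n_inner=200, eps=0.1):
    """Generic FOBOS loop for f(x) + lam * sum_i w_i |x_i| (hypothetical sketch).

    grad_f : callable returning the gradient of the smooth term f at x.
    Weights start at 1 and are updated between outer stages with the
    assumed reweighting rule w_i = 1 / (|x_i| + eps).
    """
    x = np.zeros(n)
    w = np.ones(n)
    for _ in range(n_outer):
        for _ in range(n_inner):
            v = x - step * grad_f(x)                         # forward (gradient) step
            x = weighted_soft_threshold(v, step * lam * w)   # backward (proximal) step
        w = 1.0 / (np.abs(x) + eps)                          # assumed weight update
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.standard_normal((40, 100)) / np.sqrt(40)
    x_true = np.zeros(100)
    x_true[[5, 37, 80]] = [1.0, -2.0, 1.5]
    y = A @ x_true + 0.01 * rng.standard_normal(40)
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1/L for the least-squares gradient
    x_hat = fobos_weighted_l1(lambda x: A.T @ (A @ x - y), 100, lam=0.01, step=step)
    print("estimated support:", np.flatnonzero(np.abs(x_hat) > 0.1))
```

Keeping the gradient as a callable is a design choice for illustration: a different smooth criterion can be swapped in without touching the proximal step. On a tiny example with f(x) = ½‖x − y‖², y = (3, −0.5) and λ = 0.4, plain soft thresholding leaves −0.1 in the second entry, whereas the reweighted loop drives it to exactly zero and shrinks the large entry less.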

3. Results and Discussion

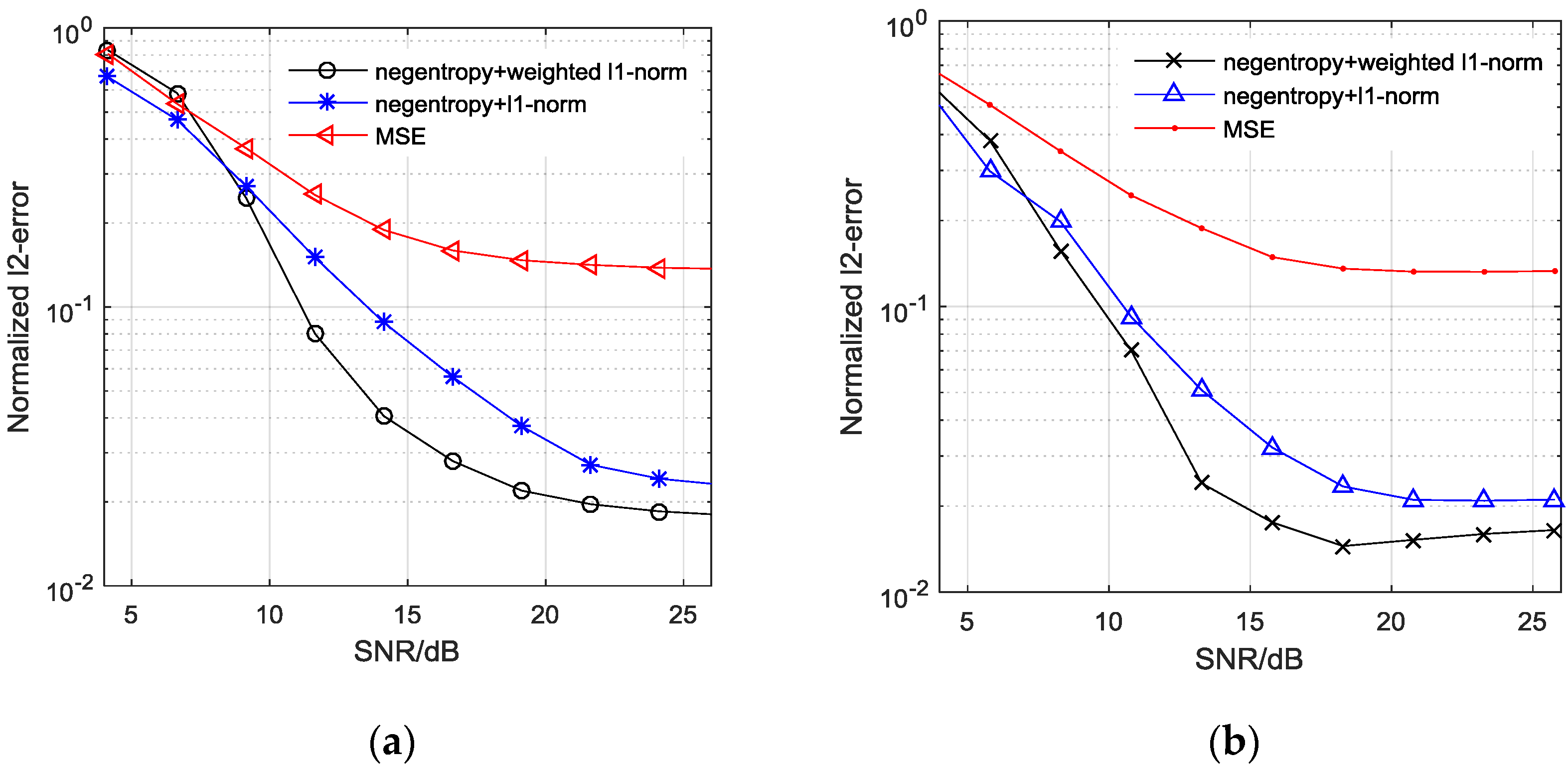

3.1. Comparison of Signals Reconstructed with the MSE Criterion and the Proposed Algorithms

3.2. Accuracy Comparison of the Algorithms

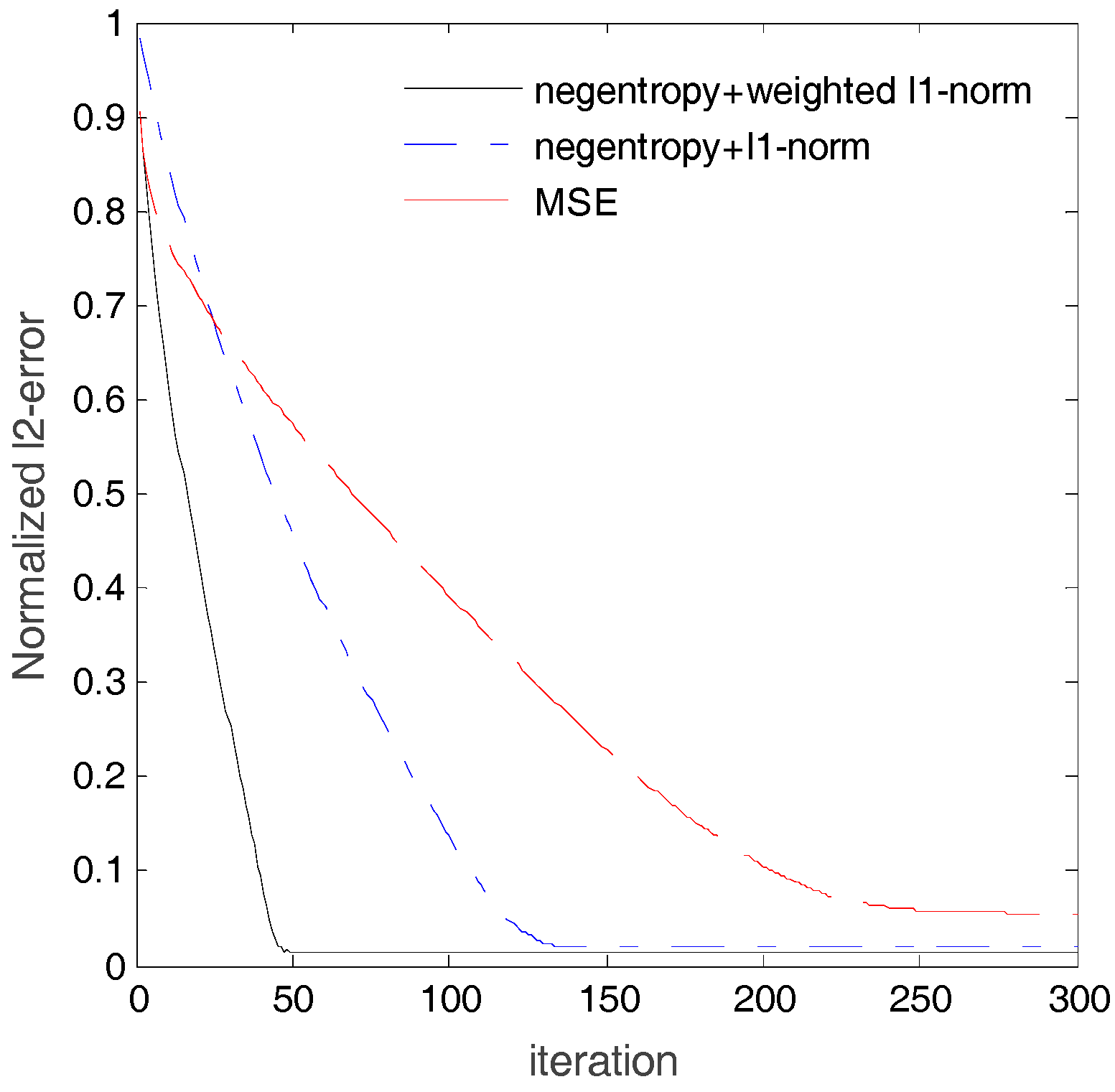

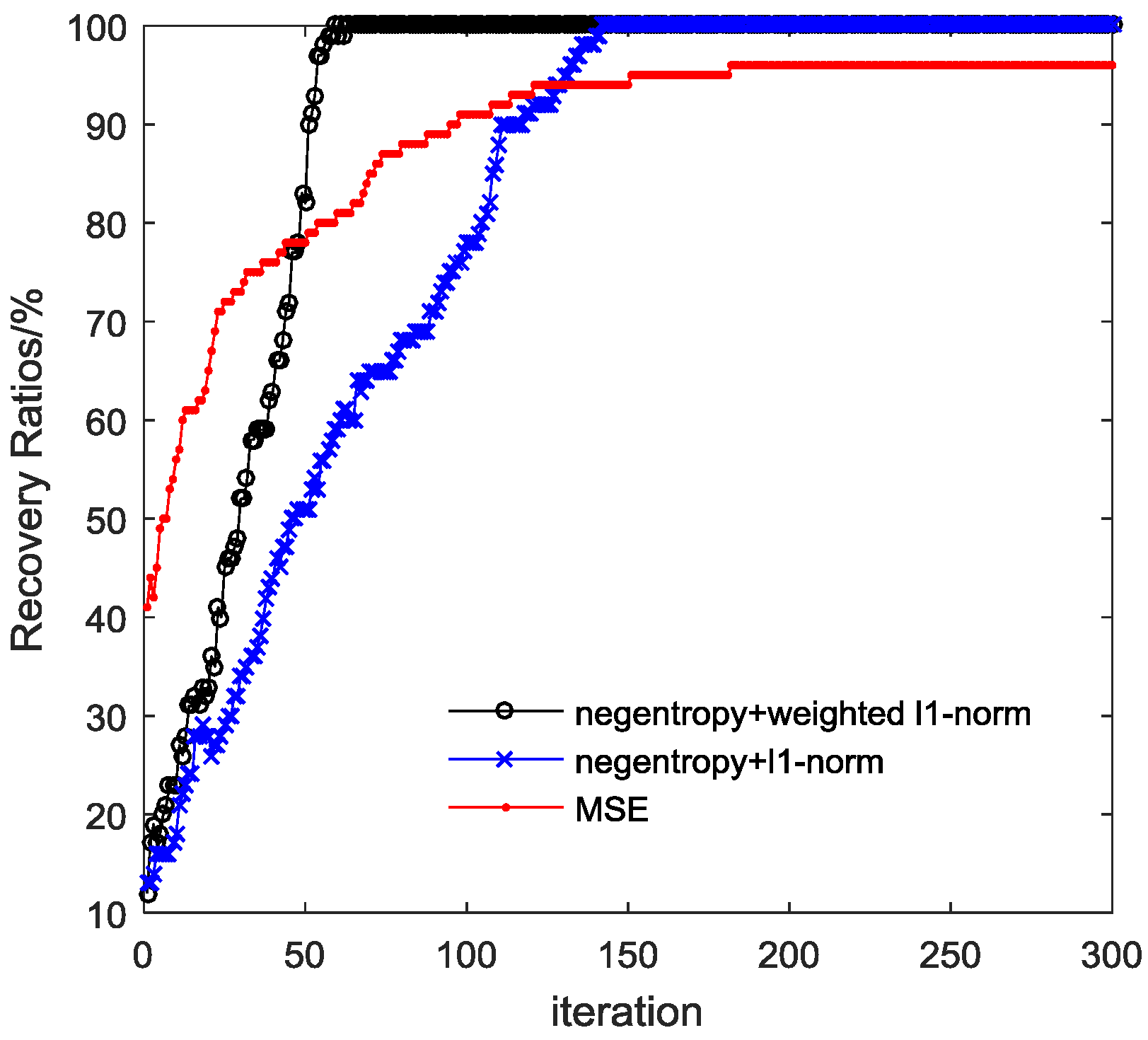

3.3. Convergence Comparison of the Algorithms

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhao, Y.; Liu, Z.; Wang, Y.; Wu, H.; Ding, S. Sparse Coding Algorithm with Negentropy and Weighted ℓ1-Norm for Signal Reconstruction. Entropy 2017, 19, 599. https://doi.org/10.3390/e19110599

Chicago/Turabian style: Zhao, Yingxin, Zhiyang Liu, Yuanyuan Wang, Hong Wu, and Shuxue Ding. 2017. "Sparse Coding Algorithm with Negentropy and Weighted ℓ1-Norm for Signal Reconstruction." Entropy 19, no. 11: 599. https://doi.org/10.3390/e19110599