1. Preface: Information Integration and Complexity

Since the publication of Shannon’s pioneering work in 1948 [

1], it has been hypothesized that his information theory provides means for understanding information processing and learning in the brain. Already in the 1950s, the principle of redundancy reduction has been proposed independently by Attneave [

2] and Barlow [

3]. In 1981, Laughlin has provided some experimental evidence for the redundancy reduction principle in terms of the maximization of the output entropy of large monopolar cells of the fly’s compound eye [

4]. As only deterministic response functions have been considered, this principle turns out to be equivalent to the mutual information maximization between the input and the output. Later, Linsker [

5] has demonstrated that the maximization of mutual information in a layered feed-forward network leads to feature detectors that are similar to those observed by Hubel and Wiesel in the visual system of the cat and the monkey [

6,

7]. He coined his information-theoretic principle of learning the

infomax principle.

The idea that an information-theoretic principle, such as the infomax principle, governs learning processes of neuronal systems has attracted many researchers. A highly recognized contribution in this regard is the work by Bell and Sejnowski [

8] which applies the infomax principle to the source separation problem. An exhaustive review of all relevant contributions to that field is not within the scope of this short discussion. I shall focus on approaches that aim at relating such information based principles to the overall complexity of the system. In particular, I shall concentrate on the theory of information integration and complexity, initially proposed by Tononi, Sporns, and Edelman [

9], and further developed and analyzed in a series of papers [

10–

15]. I shall compare this line of research with my own information-geometric approach to complexity, initially proposed in my manuscript [

16], entitled

Information Geometry on Complexity and Stochastic Interaction, which led to various lines of research that I am going to outline below. This manuscript constitutes the main body of the present paper, starting with Section 2. It quantifies complexity as the extent to which the whole is more than the sum of its parts using information geometry [

17]. Thereby, it extends the notion of multi-information [

18,

19], also called information integration in [

9], to the setting of discrete time stochastic processes, in particular Markov chains. This article was originally accepted for publication in IEEE Transactions on Information Theory, subject to minor revision. However, by the end of the unusually long reviewing process I had come to the conclusion that my geometric approach has to be further improved in order to address important aspects of complexity (I shall be more concrete on that). Recent developments, on the other hand, suggest that this work is of relevance in the context of information integration already in its present form [

12–

15,

20,

21]. Therefore, it should be useful to provide it together with a discussion of its strengths and shortcomings, thereby relating it to similar work that has been developed since its first publication.

Let us first consider the so-called

multi-information [

18,

19] of a random vector

X = (

Xv)

v∊V, taking values in a finite set:

where

H denotes the Shannon entropy (we assume

V to be a non-empty and finite set). The multi-information vanishes if and only if the variables

Xv, v ∊ V, are stochastically independent. In their original paper [

9], Tononi, Sporns, and Edelman call this quantity

integration. Following their intuition, however, the notion of integration should rather refer to a dynamical process, the process of integration, which is causal in nature. In later works, the dynamical aspects have been more explicitly addressed in terms of a causal version of mutual information, leading to improved notions of

effective information and

information integration, denoted by Φ [

10,

11]. In fact, most formulated information-theoretic principles are, in some way or another, based on (conditional) mutual information. This directly fits into Shannon’s classical sender-receiver picture [

1], where the mutual information has been used in order to quantify the capacity of a communication channel. At first sight, this picture suggests to treat only feed-forward networks, in which information is transmitted from one layer to the next, as in the context of Linsker’s infomax principle. In order to overcome this apparent restriction, however, we can simply unfold the dynamics in time and consider corresponding temporal information flow measures, which allows us to treat also recurrent networks. In what follows, I am going to explain this idea in more detail, thereby providing a motivation of the quantities that are derived in Section 2 in terms of information geometry.

We consider again a non-empty and finite set

V of nodes and assume that each

v ∊ V receives signals from a set of nodes which we call

parents of

v and denote by

pa(

v). Based on the received signals, the node

v updates its state according to a Markov kernel

K(v), the mechanism of

v, which quantifies the conditional probability of its new state

given the current state

ωpa(v) of its parents. If

v ∊ pa(

v), this update will involve also

ωv for generating the new state

. How much information is involved from “outside”, that is from

∂(

v) :=

pa(

v)

\ v, in addition to the information given by

ωv? We can define the

local information flow from this set as

where

MI stands for the (conditional) mutual information. Note that this is the uncertainty reduction that the node

v gains through the knowledge of its parents’ state, in addition to its own state. Now let us define the total information flow in the network. In order to do so, we have to consider the overall transition kernel. Because the nodes update their states in parallel, the global transition kernel is given as

In order to quantify the

total information flow in the network, we simply add all the local information flows, defined by

Equation (2), and obtain

It is easy to see that the total information flow vanishes whenever the global transition kernel has the following structure which encodes the dynamics of isolated non-communicating nodes:

Referring to these kernels as being

split, we are now ready to give our network information flow measure, defined by

Equation (4), a geometric interpretation. If

K has the structure

Equation (3) then

Here,

Dp(

K || K′) is a measure of “distance”, in terms of the Kullback-Leibler divergence, between

K and

K′ with respect to the distribution

p (see definition by

Equation (23)). The expression on the right-hand side of

Equation (6) can be considered as an extension of the multi-information

(1) to the temporal domain. The second equality,

Equation (7), gives the total information flow in the network a geometric interpretation as the distance of the global dynamics

K from the set of split dynamics. Stated differently, the total information flow can be seen as the extent to which the whole transition

X → X′ is more than the sum of its individual transitions

,

v ∊ V. Note, however, that

Equation (6) follows from the additional structure

(3) which implies

. This structure encodes the consistency of the dynamics with the network.

Equation (7), on the other hand, holds for any transition kernel

K. Therefore, without reference to a particular network, the distance min

K′ split Dp(

K || K′) can be considered as a complexity measure for any transition

X → X′, which we denote by

C(1)(

X → X′). The information-geometric derivation of

C(1)(

X → X′) is given in Section 2.4.1. Restricted to kernels that are consistent with a network, the complexity

C(1)(

X → X′) reduces to the total information flow in the network (see Proposition 2 (iv)).

In order to consider the maximization of the complexity measure

C(1)(

X → X′) as a valid information-theoretic principle of learning in neuronal systems, I analyzed the natural gradient field on the manifold of kernels that have the structure given by

Equation (3) (see [

17,

22] for the natural gradient method within information geometry). In [

23] I proved the consistency of this gradient in the sense that it is completely local: If every node

v maximizes its own local information flow, defined by

Equation (2), in terms of the natural gradient, then this will be the best way, again with respect to the natural gradient, to maximize the complexity of the whole system. This suggests that the infomax principle by Linsker and also Laughlin’s ansatz, applied locally to recurrent networks, will actually lead to the maximization of the overall complexity. We used geometric methods to study the maximizers of this complexity analytically [

24,

25]. We have shown that they are almost deterministic, which has quite interesting implications, for instance for the design of learning systems that are parametrized in a way that allows them to maximize their complexity [

26] (see also [

27] for an overview of geometric methods for systems design). Furthermore, evidence has been provided in [

25] that the maximization of

C(1)(

X → X′) is achieved in terms of a rule that mimics the spike-timing-dependent plasticity of neurons in the context of discrete time. Together with Wennekers, we have studied complexity maximization as first principle of learning in neural networks also in [

28–

33].

Even though I implicitly assumed that a natural notion of information flow has to reflect the causal interactions of the nodes, I should point out that the above definition of information flow has a shortcoming in this regard. If

Xv and

X∂(v) contain the same information, due to a strong stochastic dependence, then the conditional mutual information in

Equation (2) will vanish, even though there might be a strong causal effect of

∂(

v) on

v. Thus, correlation among various potential causes can hide the actual causal information flow. The information flow measure of

Equation (2) is one instance of the so-called transfer entropy [

34] which is used within the context of Granger causality and has, as a conditional mutual information, the mentioned shortcoming also in more general settings (see a more detailed discussion in [

35]). In order to overcome these limitations of the (conditional) mutual information, in a series of papers [

35–

39] we have proposed the use of information theory in combination with Pearl’s theory of causation [

40]. Our approach has been discussed in [

41] where a variant of our notion of node exclusion, introduced in [

36], has been utilized for an alternative definition. This definition, however, is restricted to direct causal effects and does not capture, in contrast to [

35], mediated causal effects.

Let us now draw a parallel to causality issues of the complexity measure introduced in the original work [

9], which we refer to as

TSE-complexity. In order to do so, consider the following representation of the original TSE-complexity as weighted sum of mutual informations:

where

. Interpreting the mutual information between

A and its complement

V\A in this sum as an information flow is clearly misleading. These terms are completely associational and neglect the causal nature of information flow. In [

10,

11], Tononi and Sporns avoid such inconsistencies by injecting noise (maximum entropy distribution) into

A and then measuring the effect in

V \ A. They use the corresponding interventional mutual information in order to define

effective information. Note that, although their notion of noise injection is conceptually similar to the notion of intervention proposed by Pearl, they formalize it differently. However, the idea of considering a post-interventional mutual information is similar to the one formalized in [

35,

36] using Pearl’s interventional calculus.

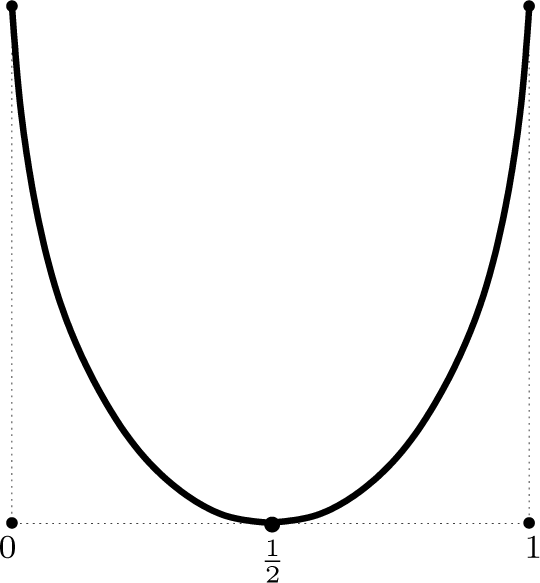

Clearly, the measure

C(1)(

X → X′) does not account for all aspects of the system’s complexity. One obvious reason for that can be seen by comparison with the multi-information, defined by

Equation (1), which also captures some aspects of complexity in the sense that it quantifies the extent to which the whole is more than the sum of its elements (parts of size one). On the other hand, it attains its (globally) maximal value, if and only if the nodes are completely correlated. Such systems, in particular completely synchronized systems, are generally not considered to be complex. Furthermore, it turns out that these maximizers are determined by the marginals of size two [

42]. Stated differently, the maximization of the extent to which the whole is more than the sum of its parts of size one leads to systems that are not more than the sum of their parts of size two (see for a more detailed discussion [

43,

44]). Therefore, the multi-information does not capture the complexity of a distribution at all levels. The measure

C(1)(

X → X′) has the same shortcoming as the multi-information. In order to study different levels of complexity, one can consider coarse-grainings of the system at different scales in terms of corresponding partitions Π = {

S1,

…, Sn} of

V. Given such a partition, we can define the information flows among its atoms

Si as we already did for the individual elements

v of

V. For each

Si, we denote the set of nodes that provide information to

Si from outside by

. We quantify the information flow into

Si as in

Equation (2):

For a transition that satisfies

Equation (3), the total information flow among the parts

Si is then given by

We can now define the Π-

complexity of a general transition, as we already did for the complete partition:

Obviously, the Π-

complexity coincides with the information flow

IF (

X → X′ | Π) in the case where the transition kernel is compatible with the network. The information-geometric derivation of

C(

X → X′ | Π) is given in Section 2.4.1. In the early work [

10,

11], a similar approach has been proposed where only bipartitions have been considered. Later, an extension to arbitrary partitions has been proposed by Balduzzi and Tononi [

12,

13] where the complexity defined by

Equation (11) appears as measure of effective information. Note, however, that there are important differences. First, the proposed measure by Tononi and his coworkers is reversed in time, so that their quantity is given by

Equation (11) where

X and

X′ have exchanged roles. This time-reversal of the effective information is motivated by its intended role as a measure relevant to conscious experience. This does not make any difference in the case where a stationary distribution is chosen as input distribution. However, in order to be consistent with causal aspects of conscious experience, the authors choose a uniform input distribution, which models the least informative prior about the input.

Note that there is also a closely related measure, referred to as

synergistic information in the works [

15,

45]:

The last equation directly follows from Proposition 1 (iii) (see the derivation of

Equation (29)). Interpreting the mutual informations as (one-step) predictive information [

46–

48], the synergistic information quantifies the extent to which the predictive information of the whole system exceeds the sum of predictive informations of the elements.

Now, having for each partition of the system the corresponding Π-complexity of

Equation (11), how should one choose among all these complexities the right one? Following the proposal made in [

10–

13], one should identify the partition (or bipartition) that has the smallest, appropriately normalized, Π-complexity. Although the overall complexity is not explicitly defined in these works, the notion of

information integration, denoted by Φ, seems to directly correspond to it. This is confirmed by the fact that information integration is used for the identification of so-called

complexes in the system. Loosely speaking, these are defined to be subsets

S of

V with maximal information integration Φ(

S). This suggests that the authors equate information integration with complexity. In a further refinement [

12,

13] of the information integration concept, this is made even more explicit. In [

13], Tononi writes: “In short, integrated information captures the information generated by causal interactions in the whole, over and above the information generated by the parts.”

Defining the overall complexity simply as the minimal one, with respect to all partitions, will ensure that a complex system has a considerably high complexity at

all levels. I refer to this choice as the

weakest link approach. This is not the only approch to obtain an overall complexty measure from individual ones defined for various levels. In order to give an instructive example for an alternative approach, let us highlight another representation of the TSE-complexity. Instead of the atoms of a partition, this time we consider the subsets of

V with a given size

k ∊ {1,

…, N} and define the following quantity:

Let us compare this quantity with the multi-information of

Equation (1). For

k = 1, they are identical. While the multi-information quantifies the extent to which the whole is more than the sum of its elements (subsets of size one), its generalization

C(k)(

X) can be interpreted as the extent to which the whole is more than the sum of its parts of size

k. Now, defining the overall complexity as the minimal

C(k)(

X) would correspond to the weakest link approach which I discussed above in the context of partitions. A complex system would then have considerably high complexity

C(k)(

X) at all levels

k. However, the TSE-complexity is not constructed according to the weakest link approach, but can be written as a weighted sum of the terms

C(k)(

X):

where

. The right choice of the weights is important here. I refer to this approach as the

average approach. Clearly, one can interpolate between the weakest link approach and the average approach using the standard interpolation between the

L∞-norm (maximum) and the

L1-norm (average) in terms of the

Lp-norms,

p ≥ 1. However,

Lp-norms appear somewhat unnatural for entropic quantities.

The TSE-complexity has also an information-geometric counterpart which has been developed in a series of papers [

43,

44,

49,

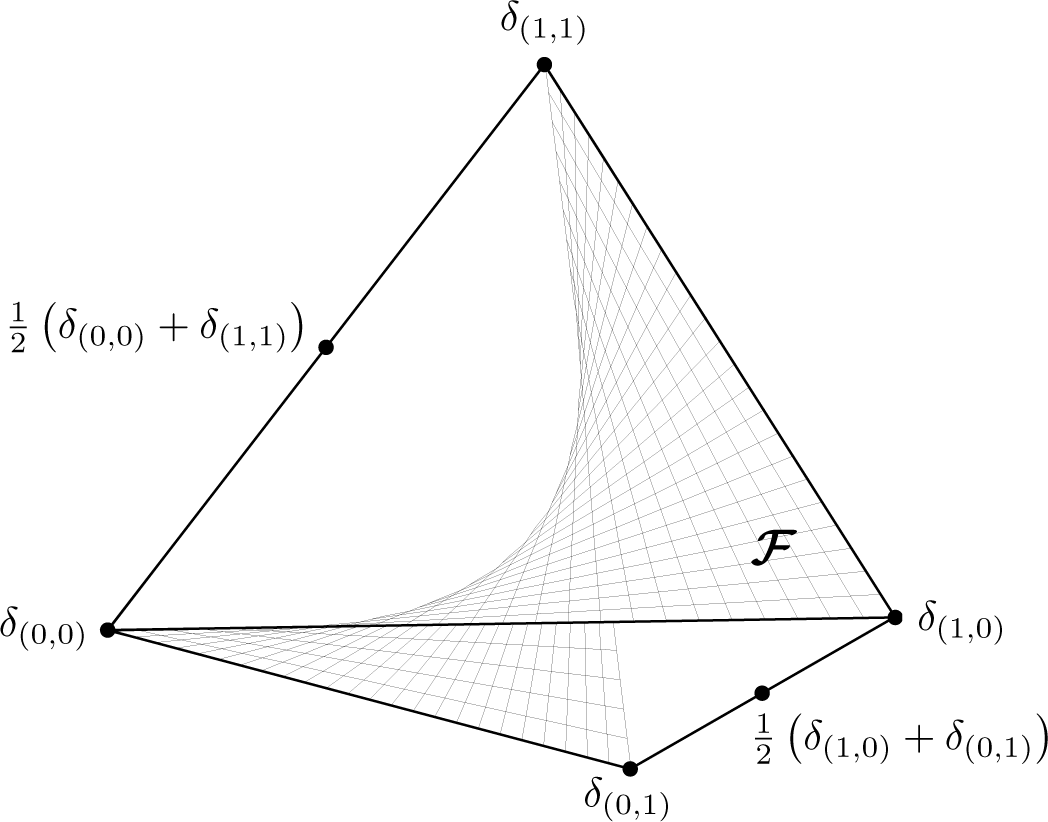

50]. It is instructive to consider this geometric reformulation of the TSE-complexity. For a distribution

p, let

p(k) be the maximum-entropy estimation of

p with fixed marginals of order

k. In particular,

p(N) =

p, and

p(1) is the product of the marginals

pv, v ∊ V, of order one. In some sense,

p(k) encodes the structure of

p that is contained only in the parts of size

k. The deviation of

p from

p(k) therefore corresponds to

C(k)(

X), as defined in

Equation (14). This correspondence can be made more explicit by writing this deviation in terms of a difference of entropies:

where

D denotes the Kullback-Leibler divergence. If we compare the

Equations (16) and

(14), then we see that

corresponds to

. Indeed, both terms quantify the entropy that is contained in the marginals of order

k. From the information-geometric point of view, however, the second term appears more natural. The first term seems to count marginal entropies multiple times so that we can expect that this mean value is larger than

. In [

43], we have shown that this is indeed true, which implies

If we replace the

C(k)(

X) in the definition

(15) of the TSE-complexity by

D(

p || p(k)), then we obtain with the Pythagorean theorem of information geometry the following quantity:

where

. Let us compare this with the multi-information. Following [

18], we can decompose the multi-information as

I already mentioned that high multi-information is achieved for strongly correlated systems, which implies that the global maximizers can be generated by systems that only have pairwise interactions [

42], that is

p =

p(2). It follows that in the above decompsition of

Equation (19), only the first term

D(

p(2) || p(1)) is positive while all the other terms vanish for maximizers of the multi-information. This suggests that the multi-information does not weight all contributions

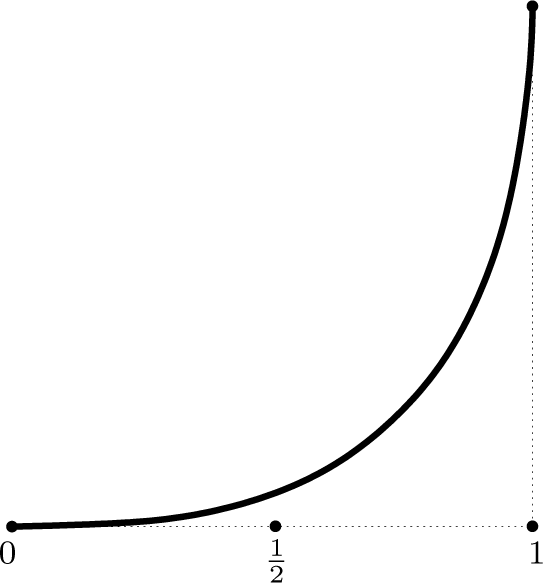

D(

p(k+1) || p(k)) to the stochastic dependence in a way that would qualify it as a complexity measure. The measure defined by

Equation (18), which I see as an information-geometric counterpart of the TSE-complexity, weights the higher-order contributions

D(

p(k+1) || p(k)),

k ≥ 2, more strongly. In this geometric picture, we can interpret the TSE-complexity as a rescaling of the multi-information in such a way that its maximization will emphasize not only pairwise interactions.

Concluding this preface, I compared two lines of research, the one pursued by Tononi and coworkers on information integration, and my own information-geometric research on complexity. The fact that both research lines independently identified closely related core concepts of complexity confirms that these concepts are quite natural. The comparison of the involved ideas suggests the following intuitive definition of complexity:

The complexity of a system is the extent to which the whole is more than the sum of its parts at all system levels. I argue that information geometry provides natural methods for casting this intuitive definition into a formal and quantitative theory of complexity. My paper [

16], included here as Section 2, exemplifies this way of thinking about complexity. It is presented with only minor changes compared to its initial publication, except that the original reference list is replaced by the largely extended up-to-date list of references. This implies repetitions of a few standard definitions which I already used in this preface.