Action-Amplitude Approach to Controlled Entropic Self-Organization

Abstract

Motivated by the notion of perceptual error, as a core concept of the perceptual control theory, we propose an action-amplitude model for controlled entropic self-organization (CESO). We present several aspects of this development that illustrate its explanatory power: (i) a physical view of partition functions and path integrals, as well as entropy and phase transitions; (ii) a global view of functional compositions and commutative diagrams; (iii) a local geometric view of the Kähler–Ricci flow and time-evolution of entropic action; and (iv) a computational view using various path-integral approximations.

1. Introduction

We are primarily interested in developing advanced models of military command and control (C2), although our approach should also be applicable across a wide range of non-military organizational decision-making settings. In its simplest form, we formulate the problem as follows. Consider a group Γ := {Γi: i = 1, 2, …, n} of n agents, each characterized by its own intent, Ii, and the set of m capabilities {Cj: j = 1, 2, …, m}. The basic modelling construction of the problem is that, according to perceptual control theory (see [1,2]), the perceptual error of an agent, Γi, is the difference between its intent and the consequences, A(Cj), of its choice of a capability, Cj:

$$E_i = |I_i - A(C_j)| \tag{1}$$

Thus, under this construction, the optimal behavior of each agent, Γi, is given by minimizing the perceptual error, Ei:

so that the probability Pri = Pri(Xi = Cj) of a specific choice, Cj, is expected to be maximal when the perceptual error (2) is minimal.
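As a minimal illustration of this selection rule, the following Python sketch picks the capability with the smallest absolute perceptual error. The function names and the numerical values of the intent and of the consequences are hypothetical and serve only to make the construction concrete.

```python
def perceptual_error(intent, consequence):
    """Absolute-value perceptual error between an intent and a consequence."""
    return abs(intent - consequence)


def best_capability(intent, consequences):
    """Return (index, error) of the capability with the smallest error."""
    errors = [perceptual_error(intent, a) for a in consequences]
    j = min(range(len(errors)), key=errors.__getitem__)
    return j, errors[j]


# Hypothetical data: one agent with intent 0.7 and three capabilities.
intent = 0.7
consequences = [0.1, 0.65, 0.9]  # assumed consequences of C_1, C_2, C_3
j_best, e_best = best_capability(intent, consequences)
```

Here the second capability is selected, since its consequence lies closest to the intent.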

The following geometrical interpretations can be given to the optimization Problem (1) and (2):

- (1)

The perceptual error, Ei, given by the simple absolute value (1) can be “promoted” to the Euclidean L2-norm:

or to the Euclidean metric:

- (2)

Its continuous generalization, allowing for a continuum of capability choices, is given by the Banach L2-norm:

and the associated Banach metric between any two real-valued square-integrable functions, I, A, defined on a real interval, [a, b] ⊂ ℝ:

For example, the finite control problem is actually a minimization of the square of the Banach metric (6). (We remark that in finite control (i.e., output tracking and navigation), we want the scalar system output, y(x, t), to follow a continuous nominal objective trajectory, say χ(t), for a given MD system vector, x, and where t is time. This problem can be expressed as:

Upon time discretization using a suitable quadrature scheme, this becomes the following least-squares problem:

Both discretized functions include the weights of the chosen quadrature scheme.)

- (3)

The optimization Problem (2) can be rewritten in variational formulation as the stationary Hamilton action problem:

with the following particular cases:

From these cases, the set of discrete Euler–Lagrangian equations of motion on the group/graph, Γ, can be derived (see [3], as well as Section 3 below). Geometrically, this means that the perceptual error, in all three forms—the absolute value (1), the L2-norm (3)–(5), or the metric (4)–(6)—represents an energy landscape. The optimization problem is then a form of gradient or Levenberg-Marquardt (LM) descent along the Lagrangian geodesics of this energy landscape. (Note that the LM-algorithm, sometimes also known as “damped least squares” (DLS), interpolates between the gradient descent and the Gauss-Newton algorithm for quick and efficient convergence, by properly adjusting the damping parameter. It is more robust than Gauss-Newton, meaning that it can often find an optimum even when initialized far from it.)
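The discretized tracking problem mentioned in interpretation (2) can be sketched as follows. This is only an illustration under stated assumptions: we choose the composite trapezoidal rule as the quadrature scheme, and the output y(t) = t and nominal trajectory χ(t) = 0 on [0, 1] are hypothetical test functions for which the continuous cost is exactly 1/3.

```python
def trapezoid_weights(a, b, n):
    """Weights of the composite trapezoidal rule on n + 1 equally spaced nodes."""
    h = (b - a) / n
    return [h / 2 if k in (0, n) else h for k in range(n + 1)]


def tracking_cost(y, chi, a, b, n):
    """Weighted least-squares cost: sum_k w_k * (y(t_k) - chi(t_k))**2."""
    h = (b - a) / n
    w = trapezoid_weights(a, b, n)
    return sum(w[k] * (y(a + k * h) - chi(a + k * h)) ** 2 for k in range(n + 1))


# Hypothetical example: output y(t) = t tracking chi(t) = 0 on [0, 1].
cost = tracking_cost(lambda t: t, lambda t: 0.0, 0.0, 1.0, 1000)
```

The weighted sum approximates the squared L2 distance between the output and the nominal trajectory, so minimizing it over the system parameters is an ordinary (weighted) least-squares problem.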

Next, we assume the probability Pri = Pri(Xi = Cj) of a specific choice, Cj, in the optimization Problem (1) and (2) to be a monotonically-decreasing function of the perceptual error, Ei. If we consider only a finite set of capabilities, a useful example of the probability distribution function (PDF) can be formulated as an exponentially-weighted collection of discrete Dirac-delta functions:

The PDF (7) can be easily generalized to the Gibbs measure of a random variable, Xi, having the set of corresponding coordinates, {xi}, defined by the PDF [4]:

$$\Pr(X_i = x_i) = \frac{1}{Z(\beta)} \exp\left[-\beta H(x_i)\right]$$

where H ≡ H(xi) is the Hamiltonian energy function of the above energy landscape with local coordinates, {xi}, and β is a free parameter (in thermodynamics, β would be inverse temperature). The corresponding partition function (see, e.g., [5]):

$$Z(\beta) = \sum_{x_i} \exp\left[-\beta H(x_i)\right] \tag{8}$$

provides the Gibbs measure on the system’s state-space, which is a unique statistical distribution that maximizes the entropy for a fixed expectation value of the energy:

$$\langle H \rangle = -\frac{\partial \ln Z(\beta)}{\partial \beta}$$

The associated system’s order parameter, entropy, is given by:

$$S = -\sum_{x_i} \Pr(X_i = x_i) \ln \Pr(X_i = x_i) = \beta \langle H \rangle + \ln Z(\beta)$$

Entropy describes both “ignorance”, or Heisenberg’s uncertainty, and “randomness”.
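For a finite set of states, the Gibbs measure, the partition function, the mean energy and the entropy can be computed directly. The sketch below is illustrative only: the energies are hypothetical perceptual errors and β = 5 is an arbitrary choice. It also checks numerically the standard identity S = β⟨H⟩ + ln Z.

```python
import math


def gibbs(energies, beta):
    """Return the partition function Z and the Gibbs probabilities."""
    boltzmann = [math.exp(-beta * e) for e in energies]
    z = sum(boltzmann)
    return z, [b / z for b in boltzmann]


def mean_energy(energies, probs):
    """Expectation value of the energy under the given probabilities."""
    return sum(e * p for e, p in zip(energies, probs))


def entropy(probs):
    """Shannon/Gibbs entropy, in natural units."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# Hypothetical energies: perceptual errors of three capabilities.
errors = [0.6, 0.05, 0.2]
beta = 5.0
Z, P = gibbs(errors, beta)
U = mean_energy(errors, P)
S = entropy(P)
```

The capability with the smallest perceptual error receives the largest probability, and increasing β concentrates the distribution further on that capability.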

A useful particular example of Equation (8) is the partition function of a three-dimensional ensemble of n “molecules”, given by the 6n-dimensional phase-space integral:

where pi = pi(xi) are generalized momenta.

More generally, we consider Markov random fields/Markov networks, which have a Markov property described by an undirected graph (see [4]). In Markov networks with local vertex coordinates, {xi}, the PDF is usually given by the log-linear inner product model:

$$\Pr(X = x) = \frac{1}{Z} \exp\left(\sum_i w_i f_i(x_i)\right) \tag{10}$$

where fi = fi(xi) are the feature functions and wi are their corresponding weights (so that ∑ wifi is their dot-product), while Z is the partition function. When the PDF (10) is strictly positive, the Markov network is often called the Gibbs random field.

We remark here that Markov random fields have been introduced as a Markovian framework for the Ising spin-lattice model, defined by the Hamiltonian energy function (given here in its simplest dot-product form):

where σi ∈ {+1, −1} are discrete spin-up and spin-down states (or, more generally, Pauli spin matrices), while Jij is the interaction matrix with synaptic weights. The same Hamiltonian has also been used in Hopfield neural networks [6], in which case, σi represents the state of the McCulloch–Pitts neuron [7].
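The shared Ising/Hopfield energy function can be illustrated with a small sketch. The sign and the factor 1/2 used below are one common convention and are an assumption here, as are the Hebbian weights and the four-spin pattern, which are purely illustrative.

```python
def ising_energy(J, sigma):
    """Energy H = -(1/2) * sum_{i != j} J[i][j] * sigma[i] * sigma[j] (assumed convention)."""
    n = len(sigma)
    return -0.5 * sum(
        J[i][j] * sigma[i] * sigma[j]
        for i in range(n) for j in range(n) if i != j
    )


def hebbian_weights(pattern):
    """Symmetric weights storing a single +/-1 pattern (zero diagonal)."""
    n = len(pattern)
    return [[0 if i == j else pattern[i] * pattern[j] for j in range(n)]
            for i in range(n)]


def async_update(J, sigma):
    """One sweep of asynchronous McCulloch-Pitts threshold updates."""
    s = list(sigma)
    for i in range(len(s)):
        field = sum(J[i][j] * s[j] for j in range(len(s)) if j != i)
        s[i] = 1 if field >= 0 else -1
    return s


pattern = [1, -1, 1, -1]      # hypothetical stored pattern
J = hebbian_weights(pattern)
noisy = [1, 1, 1, -1]         # stored pattern with one spin flipped
recalled = async_update(J, noisy)
```

One update sweep restores the stored pattern and lowers the energy, which is the sense in which such a network descends its energy landscape.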

In this paper, we present several different views of an action-amplitude model for controlled entropic self-organization (CESO).

2. Physical View on CESO

2.1. From Partition Function to Feynman’s Path Integral

We have already seen from example Equation (9) that the number of random variables, Xi, need not be countable; if we continue in this direction, the set of corresponding coordinates, {xi}, becomes a field ϕ = ϕ (x) ∈ ℝn. Consequently, the sum in Equation (8) is replaced by the path integral (see, e.g., [8]):

$$Z(\phi) = \int \mathcal{D}[\phi]\, \exp\left[-\beta H(\phi)\right] \tag{11}$$

where D[ϕ] represents the appropriate Lebesgue measure. (We remark that as any quantum system has both a continuous spectrum (consisting of eigenfunctions) and a discrete spectrum (consisting of eigenvectors), the path-integral symbol, ∫ D[ϕ], represents both the integration over the continuous spectrum and the summation over the discrete spectrum of the field system ϕ = ϕ (x) [8].)

More generally, in quantum field theory, instead of the field Hamiltonian, H(ϕ), we have the classical (Lagrangian or Hamiltonian) action, A(ϕ), of the theory. Both the real path integral in imaginary time (the so-called Euclidean path integral):

$$Z_{\mathrm{Euc}}(\phi) = \int \mathcal{D}[\phi]\, e^{-A(\phi)} \tag{12}$$

and the complex path integral in real time (the so-called Lorentzian path integral):

$$Z_{\mathrm{Lor}}(\phi) = \int \mathcal{D}[\phi]\, e^{\mathrm{i} A(\phi)} \tag{13}$$

represent partition functions of the quantum field theory in ℝn. In both cases, quantum probability Pr(ϕ) is defined as the absolute square of the transition amplitude:

Finally, we generalize our quantum theory of fields, from ϕ ≡ ϕ (x) ∈ ℝn to Φ ≡ Φ(x) ∈ M, defined on an arbitrary nonlinear configuration n-manifold, M, with its curved geometry and topology with a certain number of holes. In this way, we arrive at our main conceptual tool, the geometrical/topological path integral, in its Euclidean and Lorentzian versions, respectively given by (see [3]):

Here, A[Φ] represents classical Lagrangian action, the integration is performed over all continuous paths + fields + geometries defined on the configuration manifold, M, while summation is performed along the edges of the corresponding discrete graph structure, obtained either by putting the tram-tracks-like constraints along the manifold, M, or by performing some form of triangulation discretization. (Note that, given any topological/smooth manifold, M, one can always obtain not one, but rather a family (or a set) of discrete network/graph structures on it, defined either by fixing some tram-like constraints of motion or by simplicial (or Regge-type) triangulation. In terms of dynamics, this means spatio-temporal discretization (e.g., from the standard continuous Laplacian (or Laplace–Beltrami) operator, △, defined on a manifold, M, one can obtain the discrete Laplacian, △d.))
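To make the passage from the continuous to the discrete Laplacian concrete, the following sketch builds the graph Laplacian L = D − A (degree matrix minus adjacency matrix) of a small network. This is a standard construction that we use here as an assumed stand-in for △d; with this sign convention, L corresponds to −△. The four-vertex ring is a hypothetical example.

```python
def graph_laplacian(n, edges):
    """Graph Laplacian L = D - A of an undirected graph on n vertices."""
    L = [[0] * n for _ in range(n)]
    for i, j in edges:
        L[i][i] += 1
        L[j][j] += 1
        L[i][j] -= 1
        L[j][i] -= 1
    return L


def apply_laplacian(L, u):
    """Apply the Laplacian matrix to a vertex function u."""
    return [sum(row[k] * u[k] for k in range(len(u))) for row in L]


# Hypothetical network: a ring of four vertices.
ring = [(0, 1), (1, 2), (2, 3), (3, 0)]
L = graph_laplacian(4, ring)
flat = apply_laplacian(L, [1, 1, 1, 1])
```

Constant vertex functions are annihilated by L, mirroring the fact that the continuous Laplacian vanishes on constants.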

2.2. Three-Phase Entropic Framework with Transition Amplitudes

Recall that Prigogine’s Extended Second Law of Thermodynamics [9]:

considers open (i.e., non-isolated) irreversible systems or processes that exchange energy and matter with their environment in such a way that the entropy change (or entropy variation) is given by the sum of the internal and external entropy changes:

$$dS = d_iS + d_eS$$

where diS denotes the internal entropy production within the system, while deS is the external entropy flux due to the exchanges with the environment. For an isolated system, deS = 0.

By further extending Prigogine’s open Second Law (15) into the strict-control regime, we formulate a generic three-phase entropic framework for entropy, S, in any complex system described by one of the partition functions (11)–(14). The framework consists of the following components:

- (1)

The phase of Intent, given by the monotonically increasing entropy: ∂tS > 0;

- (2)

The phase of Action, corresponding to the conservation of information, described by the conserved entropy: ∂tS = 0;

- (3)

The phase of Control, described by the monotonically decreasing entropy: ∂tS < 0.
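The three phases can be distinguished numerically by the sign of the entropy change between two successive probability distributions. The sketch below is a hypothetical finite-difference illustration: the tolerance, the function names and the example distributions are our assumptions, and the Shannon entropy is used as the entropy measure.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy of a discrete distribution, in natural units."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def classify_phase(p_before, p_after, tol=1e-9):
    """Classify the phase by the sign of the entropy change."""
    ds = shannon_entropy(p_after) - shannon_entropy(p_before)
    if ds > tol:
        return "Intent"    # entropy increases
    if ds < -tol:
        return "Control"   # entropy decreases
    return "Action"        # entropy conserved


uniform = [0.25, 0.25, 0.25, 0.25]
peaked = [0.7, 0.1, 0.1, 0.1]
phase = classify_phase(uniform, peaked)
```

Concentrating a uniform distribution onto one outcome lowers the entropy and is therefore classified as the phase of Control.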

The phase transition from one phase to another, caused by the system’s topology change (see [8]), is described by the transition amplitude:

where “ ” denotes both continuous topology change “ ” of the system’s configuration manifold, M, and discrete topology-change “ ” of the system’s configuration graph/network structure (e.g., removing or adding vertices of the graph).

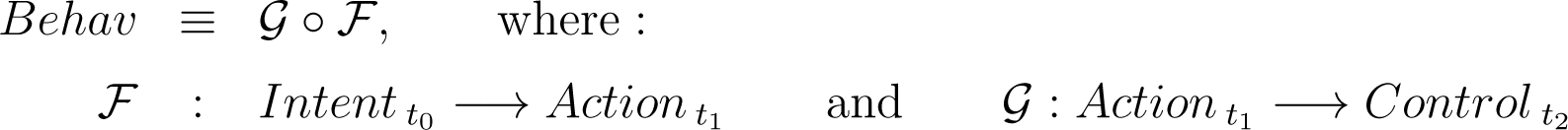

3. Global Functional View on CESO

The set—or, more appropriately, the category—of generic agents’ behaviors, Behav, is defined by the functional composition of the following two successive multidimensional maps:

such that the following diagram commutes:

The maps, F and G, are given by their respective adaptive path integrals, meaning partition functions defined by the respective multi-phase and multi-path (multi-field and multi-geometry) transition amplitudes:

Here, the Lebesgue integration, in both integrals, is performed over all continuous paths + fields + geometries/topologies, while summation is performed over all discrete random processes (i.e., Markov chains) and regional network topologies.

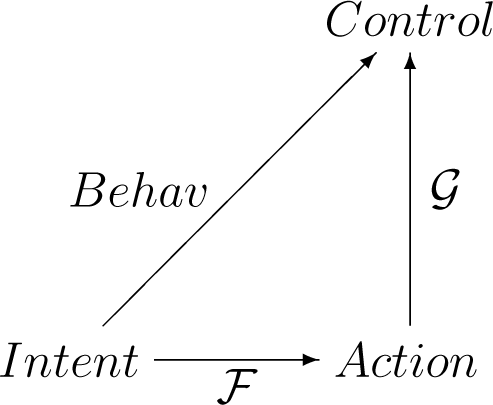

The path integral Equations (17) and (18) are constructed, respectively, from the classical behavioral actions (see [3]):

(where the overdot denotes the time derivative) and their corresponding action principles:

These correspond to classical Euler–Lagrangian equations of motion on the configuration manifold, M, with the Riemannian metric tensor, gij:

with Lagrangians:

The symbolic differentials, D[wΦ] and D[ωΨ], in the path integral Equation (17) represent adaptive path measures, defined as the weighted products:

Both adaptive path integral Equations (17) and (18) represent arbitrary topology ∞-dimensional neural networks (see [10]), with weights (w, ω) updated according to:

where Innovw(t) and Innovω(t) are their respective innovations.

4. Local Geometric View on CESO

A complexified extension of the behavioral action Equation (19), which is more appropriate for general Lorentzian path integrals, is called the Kähler-Perelman entropic action, W(g), that is, roughly speaking, a complexified Einstein–Hilbert action from general relativity. In this section, we present its time-evolution along the complex geometric dynamics, called the Kähler-Ricci flow. For this objective, we utilize the richest and most useful structure in the Kähler geometry, the so-called Fano manifold, which is a compact (i.e., closed and bounded) Kähler n-manifold (M, g) with positive first Chern class c1(M).

Recall that a Kähler manifold (M, g) is a complex n-manifold that has the following basic characteristics (for a recent review, see [11] and the references therein):

- (1)

A set of n local holomorphic coordinates: {z1, · · ·, zn} ∈ U ⊂ M, with the corresponding complex-valued differentials:

- (2)

Hermitian metric tensor, $g_{i\bar{j}}$, with the corresponding Kähler metric, g, as a positive and symmetric (1,1)-form:

and the associated Kähler form, ω, as a closed (dω = 0) and positive (1,1)-form:

- (3)

Functional space of Kähler potentials:

Now, consider the normalized Kähler–Ricci flow on a Fano n-manifold (M, g):

In a local open chart U ⊂ (M, g), starting from some smooth initial Kähler metric, g0, the flow Equation (20) is given by:

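We remark here that of central importance in Kähler geometry are the so-called Dolbeault differential operators: $\partial \equiv \partial_j$ and $\bar{\partial} \equiv \partial_{\bar{j}}$. Any p-form α defined on the Kähler manifold (M, g) is called $\bar{\partial}$-closed iff $\bar{\partial}\alpha = 0$ and $\bar{\partial}$-exact iff $\alpha = \bar{\partial}\eta$ for some (p − 1)-form η on (M, g). The Dolbeault cohomology group $H^{1,1}_{\bar{\partial}}(M, \mathbb{R})$ is a complexification of the standard de Rham cohomology group $H^{2}_{d}(M, \mathbb{R})$, defined on (M, g) as a quotient: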

It was proven in [12] that Equation (20) has a global solution, g(t), in the case that g0 has canonical Kähler class, i.e., 2πc1(M) as its Kähler class. In particular, by the $\partial\bar{\partial}$-lemma, there exists a family of real-valued functions, u(t), called Ricci potentials (see, e.g., [13]) of the metric, g(t), which are special Kähler potentials. They are determined by:

Where denotes the volume of the Kähler–Ricci flow. (The corresponding evolutions of the Ricci curvature and the scalar curvature R = R(t) on the Kähler manifold (M, g) are governed, respectively, by:

starting from some smooth initial Ricci and scalar curvatures, (0) and R(0), respectively.)

From the control-theory perspective, the most important characteristic of the Kähler-Ricci flow is the existence of its solitary solutions (solitons), which are shrinking or decaying in time. This characteristic is associated with the geometrical entropy decrease and gives the global Lyapunov stability to the flow.

Formally, a Riemannian manifold (M, g) represents a shrinking Ricci soliton iff (see, e.g., [13]):

In particular, if (M, g) is a Fano manifold with g ∈ 2πc1(M), it is a shrinking Kähler-Ricci soliton iff λ = 1 and u ≡ u(t) is the Ricci potential; that is, iff (see [11] and references therein):

or, applying the Bianchi identity, iff the following Schur-identity holds:

For any Kähler metric, g ∈ 2πc1(M), with scalar curvature s and any smooth real time-function u ≡ u(t), the Kähler–Perelman entropy E ≡ E(g) is defined by the following infimum of sets of entropy functionals (compare with Perelman’s original definition [14]):

A smooth minimizer of the entropy, E, always exists, though it need not necessarily be unique (see [15]). The entropy E admits a natural upper bound:

To see how the Kähler–Perelman entropic action, W(g, u), evolves under the Kähler-Ricci flow (20), recall that for any solution, u(t), of the backward heat equation (compare with [14]):

we have:

which implies the Kähler-Perelman monotonicity condition on the geometric entropy [11]:

Roughly speaking, the application of control is trying to reduce the system’s entropy. This is achieved through shrinking Kähler-Ricci solitons.

5. Computational View on CESO

In this section, we will outline a fast desktop simulation framework for controlled entropic self-organization, based on the preceding idea of the functional composition of path-integrals Equations (17) and (18).

In quantum field theory, there is both a theoretical and a numerical approach to solve a similar path integral as a sum-over-fields. A theoretical way consists of its perturbative expansion into a series of Feynman diagrams; although there is a Mathematica® package, FeynArts–FeynCalc, devoted to this, this approach does not scale well with respect to increasing numbers of agents and, therefore, is not well suited to our task. A numerical way of handling the problem might be to discretize a path integral on a lattice (of dimension two, three or four) and use the techniques of lattice gauge theory; again, although possible, this approach is not really feasible for the numbers of agents in which we are typically interested. (We remark that military command and control (C2) can involve hundreds of actors, and it is not unreasonable to expect that other non-military decision-making processes could involve similarly large numbers.)

In non-relativistic quantum mechanics, the path integral can be numerically solved, either by a direct implementation of the Feynman formula (see, e.g., [16]) or by Monte Carlo methods. Both these kinds of solvers are fairly slow, except for the Metropolis algorithm [17]. As shown in Feynman’s first paper [18], this path integral is equivalent to the linear Schrödinger equation. Its adaptive version is equivalent to the cubic nonlinear Schrödinger equation (NLS; see [19] and the references therein).
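As a minimal sketch of the Metropolis algorithm [17], the following code samples a single coordinate from the Gibbs weight exp(−βH). The quadratic energy H(x) = x²/2, the value β = 1, the proposal step size and the random seed are illustrative assumptions; for this choice, the exact expectation of x² equals 1/β.

```python
import math
import random


def metropolis(energy, beta, x0, n_steps, step, rng):
    """Random-walk Metropolis sampler for the weight exp(-beta * energy(x))."""
    x, e = x0, energy(x0)
    samples = []
    for _ in range(n_steps):
        x_new = x + rng.uniform(-step, step)   # symmetric proposal
        e_new = energy(x_new)
        # Accept downhill moves always, uphill moves with Boltzmann probability.
        if e_new <= e or rng.random() < math.exp(-beta * (e_new - e)):
            x, e = x_new, e_new
        samples.append(x)
    return samples


rng = random.Random(0)  # fixed seed for reproducibility
samples = metropolis(lambda x: 0.5 * x * x, beta=1.0, x0=0.0,
                     n_steps=50000, step=1.5, rng=rng)
kept = samples[5000:]   # discard burn-in
mean_x2 = sum(x * x for x in kept) / len(kept)
```

The sample average of x² approaches 1/β as the chain length grows; the same accept/reject rule applies unchanged to discretized paths, with H replaced by the discretized action.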

By analogy, in statistical mechanics, the real path integral in real time is equivalent to the linear Fokker-Planck equation, while its adaptive version is equivalent to the nonlinear Fokker-Planck equation. This approach is developed in the next subsection.

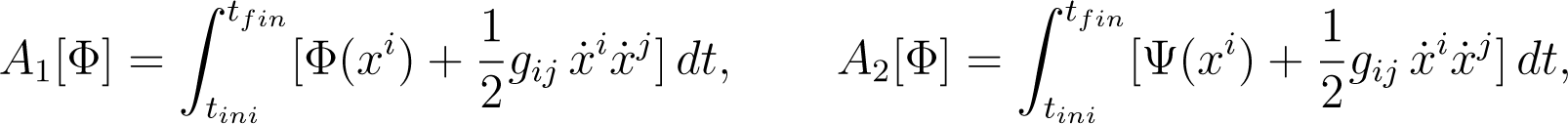

5.1. Fokker–Planck–Ito Approach

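The Fokker–Planck equation, also known as the Kolmogorov forward equation:

$$\frac{\partial P(x,t)}{\partial t} = -\frac{\partial}{\partial x}\left[f(x,t)\,P(x,t)\right] + \frac{1}{2}\frac{\partial^2}{\partial x^2}\left[g^2(x,t)\,P(x,t)\right] \tag{23}$$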

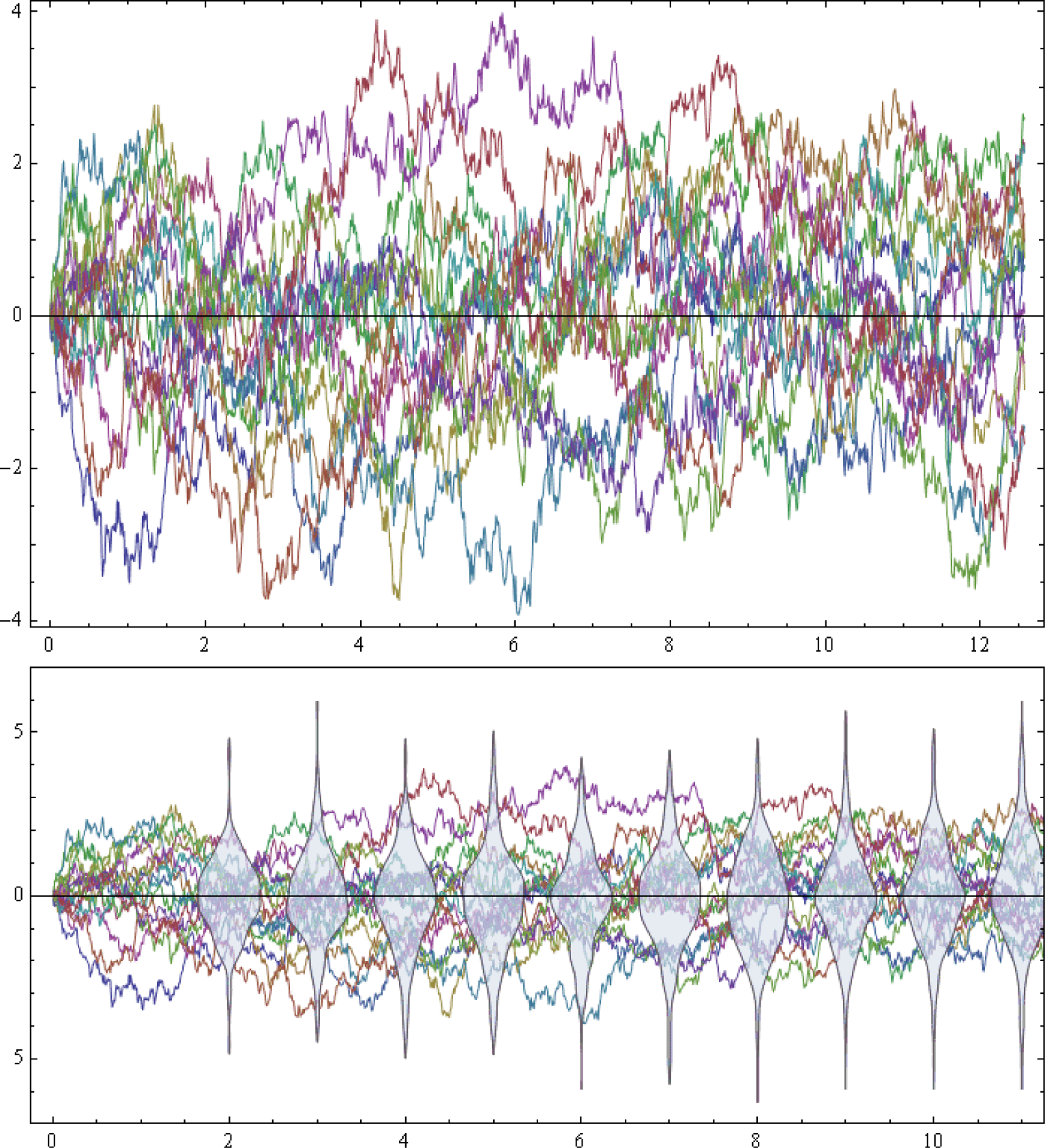

is a parabolic partial differential equation (PDE) that describes the time-forward evolution of the probability distribution, P (x, t), also called the probability density function (PDF; see Figure 1). The expression on the right-hand side of Equation (23), including:

defines the Fokker-Planck differential operator:

Note that the quadratic diffusive term vanishes in the case of zero noise g(x, t) = 0.
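A simple way to integrate the Fokker–Planck equation numerically is an explicit finite-volume scheme written in flux form, which conserves total probability by construction. The sketch below is illustrative only: the time-independent linear drift f(x) = −x, the constant noise g = 1, the grid, the time step and the zero-flux boundaries are all our assumptions. For this choice the stationary variance is g²/2 = 0.5.

```python
import math


def fokker_planck_step(P, x, dx, dt, f, g):
    """One explicit step of dP/dt = -dJ/dx, with J = f*P - (1/2) d(g^2 P)/dx."""
    n = len(P)
    J = [0.0] * (n + 1)   # face fluxes; J[0] = J[n] = 0 (zero-flux walls)
    for i in range(1, n):
        x_face = 0.5 * (x[i - 1] + x[i])
        drift = f(x_face) * 0.5 * (P[i - 1] + P[i])
        diffusion = 0.5 * (g(x[i]) ** 2 * P[i] - g(x[i - 1]) ** 2 * P[i - 1]) / dx
        J[i] = drift - diffusion
    return [P[i] - dt / dx * (J[i + 1] - J[i]) for i in range(n)]


n, dx, dt = 100, 0.1, 0.002
x = [-5.0 + (i + 0.5) * dx for i in range(n)]           # cell centres
P = [math.exp(-((xi - 1.0) ** 2) / (2 * 0.3 ** 2)) for xi in x]
norm = sum(P) * dx
P = [p / norm for p in P]                               # normalized initial PDF

for _ in range(2500):                                   # integrate to t = 5
    P = fokker_planck_step(P, x, dx, dt, f=lambda s: -s, g=lambda s: 1.0)

mass = sum(P) * dx
mean = sum(xi * p for xi, p in zip(x, P)) * dx
var = sum((xi - mean) ** 2 * p for xi, p in zip(x, P)) * dx
```

Because each face flux is added to one cell and subtracted from its neighbour, the total probability stays equal to one up to round-off, while the PDF relaxes towards the stationary Gaussian.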

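The PDE (23) satisfies the probability conservation law:

$$\frac{\partial P(x,t)}{\partial t} + \frac{\partial J(x,t)}{\partial x} = 0$$

where J(x, t) represents the probability current, defined as:

$$J(x,t) = f(x,t)\,P(x,t) - \frac{1}{2}\frac{\partial}{\partial x}\left[g^2(x,t)\,P(x,t)\right]$$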

Note that the backward Fokker–Planck equation or the Kolmogorov backward equation:

describes the time-backward evolution of the PDF, P (x, t). It corresponds to the same SDE (25). Equation (24) is an evolution PDE with the formal adjoint of the Fokker–Planck differential operator:

The Fokker–Planck PDE (23) corresponds to the Ito stochastic differential equation (SDE) with deterministic drift, f(Xt, t), and random diffusion, g(Xt, t), which describes the coupling of the drifting particle with the heat bath:

$$dX_t = f(X_t, t)\,dt + g(X_t, t)\,dW_t \tag{25}$$

where Xt is the Ito stochastic process (a solution to the SDE (25)). Wt is the Wiener process (or Brownian motion), also known as the red noise, because its time derivative, dWt/dt, represents the white noise.
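An Ito SDE of this type can be integrated with the Euler–Maruyama scheme, in which each Wiener increment is drawn as a Gaussian with variance equal to the time step. The sketch below is a generic illustration rather than the simulation used in this paper: the linear drift f = −x, the constant diffusion and the random seed are assumptions chosen so that the noise-free case can be checked against the exact solution exp(−t).

```python
import math
import random


def euler_maruyama(f, g, x0, t_end, n_steps, rng):
    """Integrate dX = f(X, t) dt + g(X, t) dW on [0, t_end]; return the path."""
    dt = t_end / n_steps
    x, t = x0, 0.0
    path = [x]
    for _ in range(n_steps):
        dW = rng.gauss(0.0, math.sqrt(dt))   # Wiener increment, variance dt
        x = x + f(x, t) * dt + g(x, t) * dW
        t += dt
        path.append(x)
    return path


rng = random.Random(1)  # fixed seed for reproducibility
noisy_path = euler_maruyama(lambda x, t: -x, lambda x, t: 0.5,
                            1.0, 1.0, 1000, rng)
drift_only = euler_maruyama(lambda x, t: -x, lambda x, t: 0.0,
                            1.0, 1.0, 1000, rng)
```

With zero noise the scheme reduces to the explicit Euler method for the drift equation; averaging many noisy paths gives a histogram that approximates the PDF evolved by the corresponding Fokker–Planck equation.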

As a simple demonstration case for the statistical path-integral simulation, we have implemented the following Ito-type SDE with the nonlinear drift, f(Xt, t), and a vector Wiener process, which includes plane waves (sine and cosine), as well as soliton-type sech waves and shock-wave-type tanh waves (see Figure 2). Thus:

where:

6. Conclusions

In this paper, we have presented several different, but complementary, modelling and computational views of controlled entropic self-organization (CESO). Motivated by the notion of perceptual error from perceptual control theory (PCT) in the setting of group decision making, we have proposed several modelling and simulation frameworks aimed at illuminating controlled self-organization phenomena: (i) a physical view of partition functions and path integrals, as well as entropy and phase transitions; (ii) a global view of functional compositions and commutative diagrams; (iii) a local view of the Kähler–Ricci flow and time-evolution of entropic action; and, finally, (iv) a computational view of various path-integral approximations.

Note that we do not intend these views of CESO to constitute any kind of normative ideal; our underlying formulation of group decision-making according to the PCT hypothesis, that actors make choices, so as to minimize their perceptual error, is merely a modelling construct, not necessarily an accurate representation of human behavior. Yet, we maintain that this construct, embodied in our four formal frameworks, provides powerful new formal tools and the basis for new empirical insights, thereby laying the foundation for other CESO models in our ongoing and future research.

Acknowledgments

The authors would like to thank Dr Todd Mansell, Chief, Joint and Operations Analysis Division, DSTO, for his support of this work.

Author Contributions

Jason Scholz conceived of the problem of modelling military C2 in terms of a Perceptual Control Theory construction, concerning the perceptual errors of each agent, and that minimizing the perceptual error would indicate an optimal behavior. His main contribution is the problem motivation (perceptual control and neurological modelling), construction of the perceptual error optimization, with the possibility of generalization to Markov random fields. Darryn Reid developed the research programme, by lifting the construction to more general settings, defining entropy as central to its explanatory power, and outlining the need for a computational view using path-integral approximations. Vladimir Ivancevic is responsible for the largest part of the mathematical development of these ideas, including the adaptive path-integral formalism, its relation to Kähler geometry and Kähler-Ricci flow, and their computer simulations.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Powers, W.T. Feedback: Beyond behaviorism. Science 1973, 179, 351–356.

- Powers, W.T. Behaviorism and feedback control. Science 1973, 181, 1118–1120.

- Ivancevic, V.; Reid, D.; Aidman, E. Crowd behavior dynamics: Entropic path-integral model. Nonlinear Dyn 2010, 59, 351–373.

- Moussouris, J. Gibbs and Markov random systems with constraints. J. Stat. Phys 1974, 10, 11–33.

- Landau, L.D.; Lifshitz, E.M. Statistical Physics, 3rd ed; Butterworth–Heinemann: Oxford, UK, 1996.

- Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558.

- McCulloch, W.S.; Pitts, W.H. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys 1943, 5, 115–133.

- Ivancevic, V.; Ivancevic, T. Complex Nonlinearity: Chaos, Phase Transitions, Topology Change and Path Integrals; Springer: Berlin, Germany, 2008.

- Nicolis, G.; Prigogine, I. Self–Organization in Nonequilibrium Systems: From Dissipative Structures to Order through Fluctuations; Wiley: New York, 1977.

- Ivancevic, V.; Ivancevic, T. Neuro–Fuzzy Associative Machinery for Comprehensive Brain and Cognition Modelling; Springer: Berlin, Germany, 2007.

- Tian, G.; Zhang, Z. Regularity of Kähler-Ricci flows on Fano manifolds 2013, arXiv, math.DG/1310.5897.

- Cao, H.D. Deformation of Kähler metrics to Kähler-Einstein metrics on compact Kähler manifolds. Invent. Math 1985, 81, 359–372.

- Morgan, J.W.; Tian, G. Ricci Flow and the Poincaré Conjecture. Clay Math. Monogr 2007, arXiv, math/0607607.

- Perelman, G. The entropy formula for the Ricci flow and its geometric applications 2002, arXiv.

- Rothaus, O. Logarithmic Sobolev inequality and the spectrum of Schrödinger operators. J. Funct. Anal 1981, 42, 110–120.

- Ivancevic, V.; Ivancevic, T. Quantum Neural Computation; Springer: Berlin, Germany, 2009.

- Metropolis, N.; Rosenbluth, A.; Rosenbluth, M.; Teller, A.; Teller, E. Equation of state calculations by fast computing machines. J. Chem. Phys 1953, 21, 1087.

- Feynman, R.P. Space–time approach to nonrelativistic quantum mechanics. Rev. Mod. Phys 1948, 20, 367–387.

- Ivancevic, V.; Reid, D. Turbulence and shock-waves in crowd dynamics. Nonlinear Dyn 2012, 68, 285–304.

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Ivancevic, V.; Reid, D.; Scholz, J. Action-Amplitude Approach to Controlled Entropic Self-Organization. Entropy 2014, 16, 2699-2712. https://doi.org/10.3390/e16052699
